How Python is Used to Scrape Amazon Best Sellers Data?

What is Web Scraping?

Web scraping, also known as web content extraction, is a method for automatically extracting huge amounts of data from websites and storing it in a useful way.

Why Should You Perform Web Scraping?

Businesses scrape competitors' sites to collect information such as articles, prices, and best-selling products, and use it to plan changes to their own products and increase profit. Web scraping APIs can be used for a variety of purposes, including market research, artificial intelligence, building large datasets, and search engine optimization.

How to Scrape Data from Amazon?

Amazon, also known as Amazon.com, is an American online retailer and cloud computing provider. It is a massive e-commerce business that sells a huge variety of products, such as electronic gadgets and clothing, and it is among the most widely used e-commerce platforms in the world.

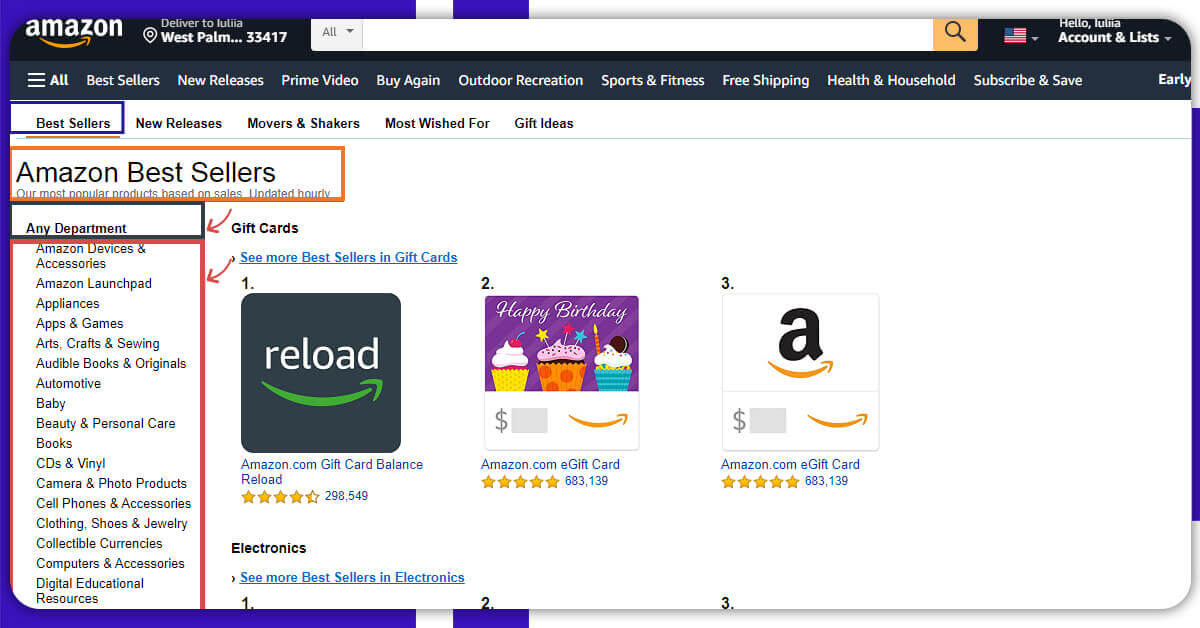

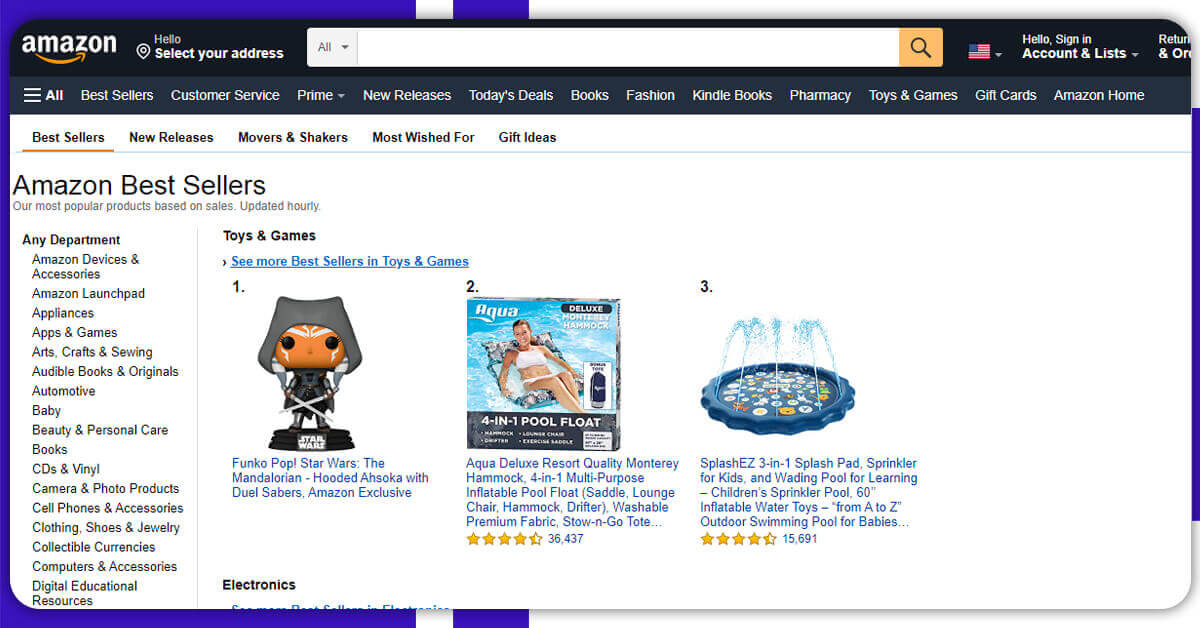

On the Amazon Best Sellers page, the categories are listed in alphabetical order by department (about 40 in all). In this project, we will use web scraping to obtain Amazon's best-selling items across a range of topics. To do so, we'll use the Python libraries requests and BeautifulSoup to request, parse, and extract the features we need from the web page.

Here is an overview of the steps:

- Install and import the libraries.

- Download and parse the Bestseller HTML page source using requests and BeautifulSoup to obtain the item categories (topics) and their URLs.

- Repeat step 2 for each item topic, using its URL.

- Extract information from each page.

- Combine the data gathered from every page into Python dictionaries.

- Save the data to a CSV file using the Pandas library.

We will create a CSV file in the following format.

Topic,Topic_url,Item_description,Rating out of 5,Minimum_price,Maximum_price,Review,Item Url

Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/131-6756172-7735956,Fire TV Stick 4K streaming device with Alexa Voice Remote | Dolby Vision | 2018 release,4.7,39.9,0.0,615699,"https://images-na.ssl-images-amazon.com/images/I/51CgKGfMelL._AC_UL200_SR200,200_.jpg"

Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/131-6756172-7735956,Fire TV Stick (3rd Gen) with Alexa Voice Remote (includes TV controls) | HD streaming device | 2021 release,4.7,39.9,0.0,1844,"https://images-na.ssl-images-amazon.com/images/I/51KKR5uGn6L._AC_UL200_SR200,200_.jpg"

Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/131-6756172-7735956,"Amazon Smart Plug, works with Alexa – A Certified for Humans Device",4.7,24.9,0.0,425090,"https://images-na.ssl-images-amazon.com/images/I/41uF7hO8FtL._AC_UL200_SR200,200_.jpg"

Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/131-6756172-7735956,Fire TV Stick Lite with Alexa Voice Remote Lite (no TV controls) | HD streaming device | 2020 release,4.7,29.9,0.0,151007,"https://images-na.ssl-images-amazon.com/images/I/51Da2Z%2BFTFL._AC_UL200_SR200,200_.jpg"

How to Execute the Code?

You can run the code by clicking the "Run" button at the top of the page and selecting "Run on Binder". You can then run the cells below, make modifications, and save your own version of the notebook.

Installing and Importing the Libraries that We Will Use

Let us start with the required libraries.

Install the libraries using the pip command, then import the packages that will be used to extract information from the website.

!pip install requests pandas bs4 --upgrade --quiet

import requests
from bs4 import BeautifulSoup
import pandas as pd

Download and Parse the Bestseller HTML Page Source Code Using Python and BeautifulSoup to Scrape the Item Category URLs

To download the page, we use the get method from the requests library. We define a User-Agent header string, which lets servers and network peers identify the requesting application, operating system, vendor, and/or version. For a scraper, a browser-like User-Agent helps avoid detection.

url ="https://www.amazon.com/Best-Sellers/zgbs/ref=zg_bs_unv_ac_0_ac_1"

HEADERS ={"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:66.0) Gecko/20100101 Firefox/66.0", "Accept-Encoding":"gzip, deflate", "Accept":"text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8", "DNT":"1","Connection":"close", "Upgrade-Insecure-Requests":"1"}

response = requests.get(url, headers=HEADERS)

print(response)

requests.get returns the data from the web page as a Response object. To check whether the request was successful, use the .status_code property. The HTTP response code for a successful response will be between 200 and 299.
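
As a minimal sketch of the success check described above, the range test can be written as a small helper. The name is_success is hypothetical and not part of requests; in the notebook you would pass it response.status_code.

```python
def is_success(status_code):
    """Return True when an HTTP status code is in the 2xx (success) range."""
    return 200 <= status_code <= 299


# A few representative codes: success, success without body, redirect,
# not found, and service unavailable.
for code in (200, 204, 301, 404, 503):
    print(code, is_success(code))
```

A check like this is slightly more permissive than comparing against 200 alone, which is the comparison used later in the fetch function.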

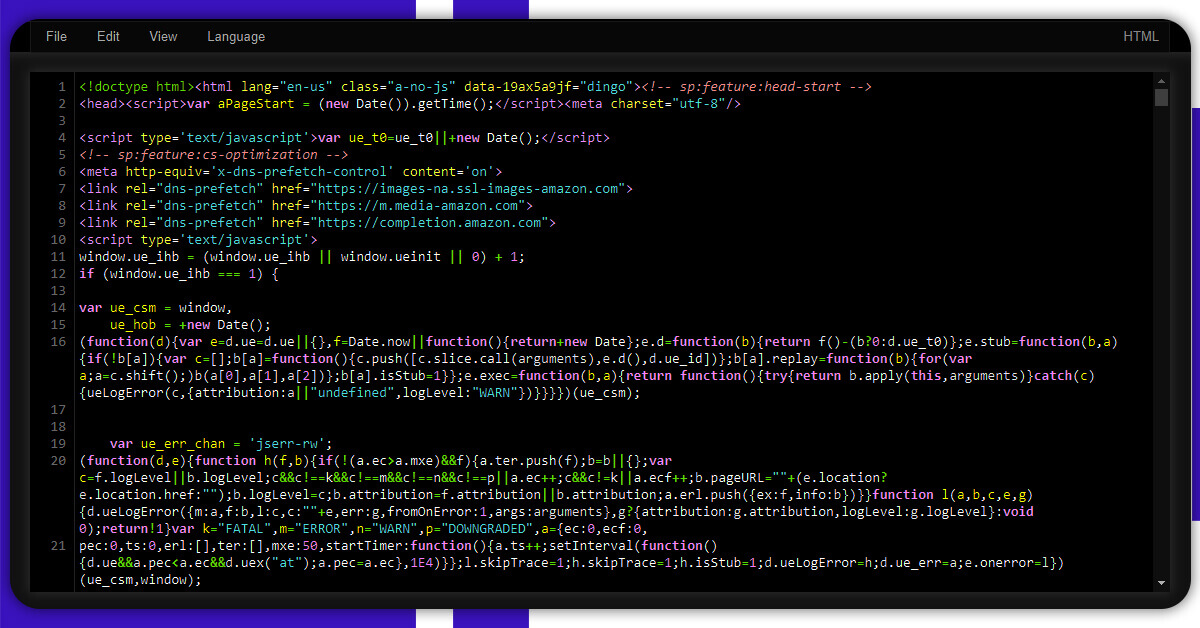

response.text[:500]

'<!doctype html> <html lang="en-us" class="a-no-js" data-19ax5a9jf="dingo"> \n <head> <script> var aPageStart = (new Date()).getTime(); </script> <meta charset="utf-8"/>\n\n <script type=\'text/javascript\'> var ue_t0=ue_t0||+new Date(); </script>\n <!-- sp:feature:cs-optimization -->\n <meta http-equiv=\'x-dns-prefetch-control\' content=\'on\'>\n <link rel="dns-prefetch" href="https://images-na.ssl-images-amazon.com">\n <link rel="dns-prefetch" href="https://m.media-amazon.com">\n <link rel="dn">'

We can use response.text to look at the contents of the web page we just downloaded, and len(response.text) to check its length. Here, we print only the first 500 characters of the page.

Save the content to a file with the .html extension.

with open("bestseller.html","w") as f:
    f.write(response.text)

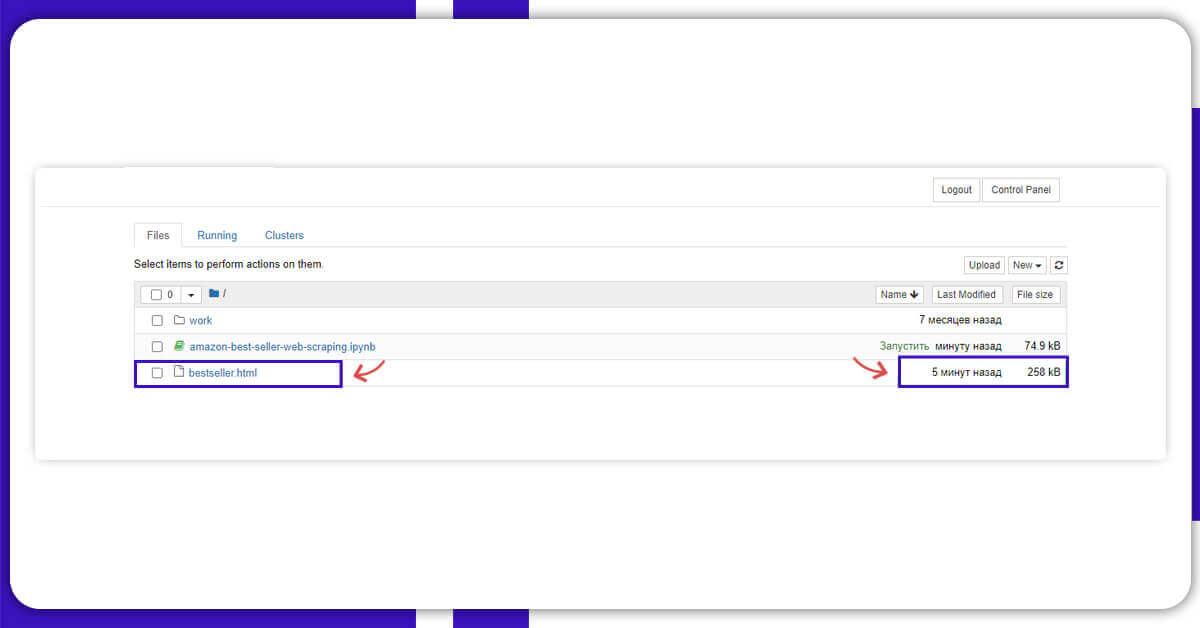

You can view the file by opening the "File > Open" menu and selecting bestseller.html from the list of files.

You can also check the file size. For this task, a file size of around 250 kB indicates that the page content was downloaded successfully, whereas a file size of about 6.6 kB indicates that the actual page content was not downloaded. A download can fail for various reasons, such as a captcha or other security checks applied to the page request.
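
The file-size check can also be done in code. The sketch below is only an illustration: the helper name looks_complete and the 100,000-byte threshold are assumptions chosen to sit between the two sizes mentioned above, and the demo writes dummy files to a temporary directory rather than touching bestseller.html.

```python
import os
import tempfile

# Assumed threshold, chosen between the ~6.6 kB and ~250 kB sizes in the text.
MIN_EXPECTED_BYTES = 100_000


def looks_complete(path, min_bytes=MIN_EXPECTED_BYTES):
    """Return True when the saved file is at least min_bytes long."""
    return os.path.getsize(path) >= min_bytes


# Demo with dummy files standing in for a blocked and a complete download.
with tempfile.TemporaryDirectory() as tmp:
    small = os.path.join(tmp, "small.html")
    large = os.path.join(tmp, "large.html")
    with open(small, "w") as f:
        f.write("x" * 6_600)
    with open(large, "w") as f:
        f.write("x" * 250_000)
    small_ok = looks_complete(small)
    large_ok = looks_complete(large)

print(small_ok, large_ok)
```

In the notebook, the same helper could be called as looks_complete("bestseller.html") right after the file is written.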

The image below shows the file when we click on it:

Although it looks like one, it is not a working copy of the web page. As you can see, none of the hyperlinks or buttons function. To examine or edit the file's source code, open Jupyter and go to "File > Open," then select bestseller.html from the list and click the "Edit" button.

with open("bestseller.html","r") as f:
    html_content = f.read()

html_content[:500]

'<!doctype html>

<html lang="en-us" class="a-no-js" data-19ax5a9jf="dingo">

<!-- sp:feature:head-start -->\n

<head>

<script>

var aPageStart = (new Date()).getTime();

</script>

<meta charset="utf-8"/>\n\n

<script type=\'text/javascript\'>

var ue_t0=ue_t0||+new Date();</script>\n

<!-- sp:feature:cs-optimization -->\n

<meta http-equiv=\'x-dns-prefetch-control\' content=\'on\'>\n

<link rel="dns-prefetch" href="https://images-na.ssl-images-amazon.com">\n

<link rel="dns-prefetch" href="https://m.media-amazon.com">\n

<link rel="dn">'

The output above shows the first 500 characters of the file content. Next, we use BeautifulSoup to parse the web page data and check the type of the result.

content = BeautifulSoup(html_content,"html.parser")
type(content)

bs4.BeautifulSoup

Access the parent tag and fetch all of the child tags that hold the information we need.

doc = content.find("ul",{"id":"zg_browseRoot"})

hearder_link_tags = doc.find_all("li")

We locate a variety of item topics (categories), as well as their URLs and titles, and put them in a dictionary.

# Find the description and URL of each item category in every department
topics_link = []
for tag in hearder_link_tags[1:]:
    topics_link.append({
        "title": tag.text.strip(),
        "url": tag.find("a")["href"]})

# Store the results in a dictionary of lists, keyed by "title" and "url"
table_topics = {k: [d.get(k) for d in topics_link]
                for k in set().union(*topics_link)}
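
To make the dictionary comprehension easier to follow, here is the same transformation applied to two made-up entries (the example.com URLs are placeholders, not real Amazon links). It turns a list of dictionaries into a dictionary of lists, one list per key.

```python
# Two illustrative entries shaped like the ones collected above.
topics_link = [
    {"title": "Appliances", "url": "https://example.com/appliances"},
    {"title": "Books", "url": "https://example.com/books"},
]

# set().union(*topics_link) collects every key that appears in any entry;
# for each key we then gather that key's value from every entry, in order.
table_topics = {k: [d.get(k) for d in topics_link]
                for k in set().union(*topics_link)}

print(table_topics["title"])
print(table_topics["url"])
```

Because d.get(k) is used, an entry that lacks a key would contribute None instead of raising an error, so the lists always stay the same length.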

Let's print table_topics to see all of the category topics found on the Bestseller page.

table_topics

{'url': ['https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Amazon-Launchpad/zgbs/boost/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Appliances/zgbs/appliances/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Appstore-Android/zgbs/mobile-apps/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Arts-Crafts-Sewing/zgbs/arts-crafts/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Audible-Audiobooks/zgbs/audible/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Automotive/zgbs/automotive/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Baby/zgbs/baby-products/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Beauty/zgbs/beauty/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/best-sellers-books-Amazon/zgbs/books/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/best-sellers-music-albums/zgbs/music/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/best-sellers-camera-photo/zgbs/photo/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers/zgbs/wireless/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers/zgbs/fashion/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Collectible-Coins/zgbs/coins/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Computers-Accessories/zgbs/pc/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers/zgbs/digital-educational-resources/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-MP3-Downloads/zgbs/dmusic/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Electronics/zgbs/electronics/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Entertainment-Collectibles/zgbs/entertainment-collectibles/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Gift-Cards/zgbs/gift-cards/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Grocery-Gourmet-Food/zgbs/grocery/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Handmade/zgbs/handmade/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Health-Personal-Care/zgbs/hpc/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Home-Kitchen/zgbs/home-garden/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Industrial-Scientific/zgbs/industrial/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Kindle-Store/zgbs/digital-text/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Kitchen-Dining/zgbs/kitchen/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Magazines/zgbs/magazines/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/best-sellers-movies-TV-DVD-Blu-ray/zgbs/movies-tv/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Musical-Instruments/zgbs/musical-instruments/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Office-Products/zgbs/office-products/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Garden-Outdoor/zgbs/lawn-garden/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Pet-Supplies/zgbs/pet-supplies/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/best-sellers-software/zgbs/software/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Sports-Outdoors/zgbs/sporting-goods/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Sports-Collectibles/zgbs/sports-collectibles/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Home-Improvement/zgbs/hi/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/Best-Sellers-Toys-Games/zgbs/toys-and-games/ref=zg_bs_nav_0/138-0735230-1420505',

'https://www.amazon.com/best-sellers-video-games/zgbs/videogames/ref=zg_bs_nav_0/138-0735230-1420505'],

'title': ['Amazon Devices & Accessories',

'Amazon Launchpad',

'Appliances',

'Apps & Games',

'Arts, Crafts & Sewing',

'Audible Books & Originals',

'Automotive',

'Baby',

'Beauty & Personal Care',

'Books',

'CDs & Vinyl',

'Camera & Photo Products',

'Cell Phones & Accessories',

'Clothing, Shoes & Jewelry',

'Collectible Currencies',

'Computers & Accessories',

'Digital Educational Resources',

'Digital Music',

'Electronics',

'Entertainment Collectibles',

'Gift Cards',

'Grocery & Gourmet Food',

'Handmade Products',

'Health & Household',

'Home & Kitchen',

'Industrial & Scientific',

'Kindle Store',

'Kitchen & Dining',

'Magazine Subscriptions',

'Movies & TV',

'Musical Instruments',

'Office Products',

'Patio, Lawn & Garden',

'Pet Supplies',

'Software',

'Sports & Outdoors',

'Sports Collectibles',

'Tools & Home Improvement',

'Toys & Games',

'Video Games']}

This gives us 40 topics (categories) from the Bestseller page.

Repeat Step 2 for Every Item Category Using the Corresponding URL

To keep pages from being rejected by a captcha, we import the time library and use its sleep function to pause for a few seconds between page requests.

import time
import numpy as np
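
The pause between requests can be wrapped in a small generator. This is a sketch under assumptions rather than the notebook's method: the name polite_iter, the randomized delay range, and the injectable sleep argument are all illustrative additions (the code in this article uses a fixed time.sleep call instead).

```python
import random
import time


def polite_iter(urls, min_delay=5.0, max_delay=10.0, sleep=time.sleep):
    """Yield each URL, pausing for a random interval before every URL after the first."""
    for index, url in enumerate(urls):
        if index > 0:
            sleep(random.uniform(min_delay, max_delay))
        yield url


# Demo: record the requested delays instead of actually sleeping.
recorded = []
visited = list(polite_iter(["page-1", "page-2", "page-3"], sleep=recorded.append))
print(visited, len(recorded))
```

Passing the sleep function as an argument keeps the demo instant; with the default argument the generator really does wait between items.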

Here, we define the fetch function, which downloads and parses a single page, and the parse_page function, which uses it to fetch and parse each individual page URL obtained from every department of the website.

def fetch(url):
    '''Download a page with requests.get and parse it with BeautifulSoup.

    Argument:
    -url(string): URL of the web page to download and parse
    Return:
    -doc(list of BeautifulSoup tags): the parent tags containing the information that we need, parsed from the page'''
    HEADERS = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:66.0) Gecko/20100101 Firefox/66.0",
               "Accept-Encoding": "gzip, deflate",
               "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
               "DNT": "1", "Connection": "close", "Upgrade-Insecure-Requests": "1"}
    response = requests.get(url, headers=HEADERS)
    if response.status_code != 200:
        print("Status code:", response.status_code)
        # Report the URL that was actually requested
        raise Exception("Failed to link to web page " + url)
    page_content = BeautifulSoup(response.text, "html.parser")
    doc = page_content.find_all('div', attrs={'class': 'a-section a-spacing-none aok-relative'})
    return doc

def parse_page(table_topics,pageNo):
    """Take all topic categories and the number of pages to parse per topic, download each
    page with a GET request, then parse it with BeautifulSoup.

    Argument:
    -table_topics(dict): dictionary containing topic description and url
    -pageNo(int): number of pages to parse per topic
    Return:
    -article_tags(list): successfully parsed page contents, each entry holding BeautifulSoup tags
    -t_description(list): topic descriptions of the successfully parsed pages
    -t_url(list): topic URLs of the successfully parsed pages
    -fail_tags(list): URLs of the pages that failed the first parsing
    -failed_topic(list): topic descriptions of the pages that failed the first parsing
    """
    article_tags, t_description, t_url, fail_tags, failed_topic = [], [], [], [], []
    for i in range(0, len(table_topics["url"])):
        # Take the topic URL and its description
        topic_url = table_topics["url"][i]
        topics_description = table_topics["title"][i]
        try:
            for j in range(1, pageNo+1):
                # Replace the trailing "ref=..." part with the paginated form
                ref = topic_url.find("ref")
                url = topic_url[:ref]+"ref=zg_bs_pg_"+str(j)+"?_encoding=UTF8&pg="+str(j)
                # Pause between requests to reduce the chance of a captcha
                time.sleep(10)
                # Use requests to obtain the HTML page content
                doc = fetch(url)
                if len(doc)==0:
                    print("failed to parse page{}".format(url))
                    fail_tags.append(url)
                    failed_topic.append(topics_description)
                else:
                    print("Sucsessfully parse:",url)
                    article_tags.append(doc)
                    t_description.append(topics_description)
                    t_url.append(topic_url)
        except Exception as e:
            print(e)
    return article_tags,t_description,t_url,fail_tags,failed_topic
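
The page URL construction inside parse_page can be tried on its own. The helper name build_page_url is hypothetical; the slicing and string concatenation are the same as in the loop above, and the topic URL is one of those listed earlier.

```python
def build_page_url(topic_url, page):
    """Swap the trailing "ref=..." part of a topic URL for the paginated form."""
    ref = topic_url.find("ref")
    return topic_url[:ref] + "ref=zg_bs_pg_" + str(page) + "?_encoding=UTF8&pg=" + str(page)


topic_url = ("https://www.amazon.com/Best-Sellers-Appliances/zgbs/appliances/"
             "ref=zg_bs_nav_0/138-0735230-1420505")
for page in (1, 2):
    print(build_page_url(topic_url, page))
```

Note that str.find returns -1 when "ref" is absent, in which case the slice would silently drop the last character of the URL, so this approach relies on every topic URL containing a "ref" segment.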

Next, we define the reparse_failed_page function, which makes a second attempt at fetching and parsing the pages that were rejected the first time. We also tried repeating the process in a while loop; a retry can succeed, but it can also still fail even when a sleep period is applied.

def reparse_failed_page(fail_page_url,failed_topic):
    """Take the URLs and topic descriptions of the pages that were not accessible (due to captcha)
    in the first parsing pass, and try to fetch and parse those pages a second time.

    Argument:
    -fail_page_url(list): URLs of the pages that failed the first parsing
    -failed_topic(list): topic descriptions of the pages that failed the first parsing
    Return:
    -article_tag2(list): successfully parsed page contents, each entry holding BeautifulSoup tags
    -topic_d(list): topic descriptions of the successfully parsed pages
    -topic_url(list): URLs of the successfully parsed pages
    -fail_p(list): URLs of the pages that failed again
    -fail_t(list): topic descriptions of the pages that failed again
    """
    print("check if there is any failed pages,then print number:",len(fail_page_url))
    article_tag2, topic_url, topic_d, fail_p, fail_t = [],[],[],[],[]
    try:
        for i in range(len(fail_page_url)):
            time.sleep(20)
            # Fetch the failed page itself (the original call passed an unrelated url variable)
            doc = fetch(fail_page_url[i])
            if len(doc)==0:
                print("page{}failed again".format(fail_page_url[i]))
                fail_p.append(fail_page_url[i])
                fail_t.append(failed_topic[i])
            else:
                article_tag2.append(doc)
                topic_url.append(fail_page_url[i])
                topic_d.append(failed_topic[i])
    except Exception as e:
        print(e)
    return article_tag2,topic_d,topic_url,fail_p,fail_t
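
The retry idea can be illustrated without any network access. In this sketch the names retry_failed and fake_fetch are hypothetical, and the fetch and wait arguments are injected so that a stand-in can replace the real download; in the notebook, fetch would be the function defined earlier and wait could be something like lambda: time.sleep(20).

```python
def retry_failed(urls, fetch, attempts=2, wait=lambda: None):
    """Try each URL up to `attempts` times; return (results, still_failed)."""
    results, still_failed = {}, []
    for url in urls:
        for _ in range(attempts):
            wait()
            doc = fetch(url)
            if len(doc) > 0:
                results[url] = doc
                break
        else:
            # The inner loop finished without a break: every attempt came back empty.
            still_failed.append(url)
    return results, still_failed


# Stand-in for fetch: "flaky" succeeds on its second call, "blocked" never does.
calls = {}


def fake_fetch(url):
    calls[url] = calls.get(url, 0) + 1
    if url == "flaky" and calls[url] >= 2:
        return ["<div>item</div>"]
    return []


results, still_failed = retry_failed(["flaky", "blocked"], fake_fetch)
print(results, still_failed)
```

Keeping the list of pages that still failed makes it possible to report them or to try again later, as the functions in this section do.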

Here we define the parse function, which performs two rounds of parsing to obtain as many pages as possible.

def parse(table_topics,pageNo):
    """Take table_topics and the number of pages to parse for each topic URL. The main purpose of this
    function is to make two attempts at parsing as many pages as possible. Its output combines the
    results of the first and second parsing passes.

    Argument:
    -table_topics(dict): dictionary containing topic description and url
    -pageNo(int): number of pages to parse per topic
    Return:
    -all_arcticle_tag(list): all successfully parsed page contents, each entry holding BeautifulSoup tags
    -all_topics_description(list): topic descriptions of all successfully parsed pages
    -all_topics_url(list): topic URLs of all successfully parsed pages
    """
    article_tags,t_description,t_url,fail_tags,failed_topic = parse_page(table_topics,pageNo)
    if len(fail_tags)!=0:
        # Second attempt for the pages that failed the first pass
        article_tags2,t_description2,t_url2,fail_tags2,failed_topic2 = reparse_failed_page(fail_tags,failed_topic)
        all_arcticle_tag = [*article_tags,*article_tags2]
        all_topics_description = [*t_description,*t_description2]
        all_topics_url = [*t_url,*t_url2]
    else:
        print("successfully parsed all pages")
        all_arcticle_tag = article_tags
        all_topics_description = t_description
        all_topics_url = t_url
    return all_arcticle_tag,all_topics_description,all_topics_url

all_arcticle_tag,all_topics_description,all_topics_url = parse(table_topics,2)

Sucsessfully parse: https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Amazon-Launchpad/zgbs/boost/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Amazon-Launchpad/zgbs/boost/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Appliances/zgbs/appliances/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Appliances/zgbs/appliances/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Appstore-Android/zgbs/mobile-apps/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Appstore-Android/zgbs/mobile-apps/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Arts-Crafts-Sewing/zgbs/arts-crafts/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Arts-Crafts-Sewing/zgbs/arts-crafts/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Audible-Audiobooks/zgbs/audible/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Audible-Audiobooks/zgbs/audible/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Automotive/zgbs/automotive/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Automotive/zgbs/automotive/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Baby/zgbs/baby-products/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Baby/zgbs/baby-products/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Beauty/zgbs/beauty/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Beauty/zgbs/beauty/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/best-sellers-books-Amazon/zgbs/books/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/best-sellers-books-Amazon/zgbs/books/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/best-sellers-music-albums/zgbs/music/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/best-sellers-music-albums/zgbs/music/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/best-sellers-camera-photo/zgbs/photo/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/best-sellers-camera-photo/zgbs/photo/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers/zgbs/wireless/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

failed to parse pagehttps://www.amazon.com/Best-Sellers/zgbs/wireless/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers/zgbs/fashion/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers/zgbs/fashion/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Collectible-Coins/zgbs/coins/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

failed to parse pagehttps://www.amazon.com/Best-Sellers-Collectible-Coins/zgbs/coins/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Computers-Accessories/zgbs/pc/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Computers-Accessories/zgbs/pc/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers/zgbs/digital-educational-resources/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers/zgbs/digital-educational-resources/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

failed to parse pagehttps://www.amazon.com/Best-Sellers-MP3-Downloads/zgbs/dmusic/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

failed to parse pagehttps://www.amazon.com/Best-Sellers-MP3-Downloads/zgbs/dmusic/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Electronics/zgbs/electronics/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Electronics/zgbs/electronics/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Entertainment-Collectibles/zgbs/entertainment-collectibles/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Entertainment-Collectibles/zgbs/entertainment-collectibles/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Gift-Cards/zgbs/gift-cards/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Gift-Cards/zgbs/gift-cards/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Grocery-Gourmet-Food/zgbs/grocery/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Grocery-Gourmet-Food/zgbs/grocery/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Handmade/zgbs/handmade/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Handmade/zgbs/handmade/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Health-Personal-Care/zgbs/hpc/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Health-Personal-Care/zgbs/hpc/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Home-Kitchen/zgbs/home-garden/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Home-Kitchen/zgbs/home-garden/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Industrial-Scientific/zgbs/industrial/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Industrial-Scientific/zgbs/industrial/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Kindle-Store/zgbs/digital-text/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Kindle-Store/zgbs/digital-text/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Kitchen-Dining/zgbs/kitchen/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Kitchen-Dining/zgbs/kitchen/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Magazines/zgbs/magazines/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Magazines/zgbs/magazines/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/best-sellers-movies-TV-DVD-Blu-ray/zgbs/movies-tv/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/best-sellers-movies-TV-DVD-Blu-ray/zgbs/movies-tv/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Musical-Instruments/zgbs/musical-instruments/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Musical-Instruments/zgbs/musical-instruments/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Office-Products/zgbs/office-products/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Office-Products/zgbs/office-products/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Garden-Outdoor/zgbs/lawn-garden/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Garden-Outdoor/zgbs/lawn-garden/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Pet-Supplies/zgbs/pet-supplies/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Pet-Supplies/zgbs/pet-supplies/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/best-sellers-software/zgbs/software/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/best-sellers-software/zgbs/software/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Sports-Outdoors/zgbs/sporting-goods/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Sports-Outdoors/zgbs/sporting-goods/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Sports-Collectibles/zgbs/sports-collectibles/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Sports-Collectibles/zgbs/sports-collectibles/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Home-Improvement/zgbs/hi/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Home-Improvement/zgbs/hi/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Toys-Games/zgbs/toys-and-games/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/Best-Sellers-Toys-Games/zgbs/toys-and-games/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

Sucsessfully parse: https://www.amazon.com/best-sellers-video-games/zgbs/videogames/ref=zg_bs_pg_1?_encoding=UTF8&pg=1

Sucsessfully parse: https://www.amazon.com/best-sellers-video-games/zgbs/videogames/ref=zg_bs_pg_2?_encoding=UTF8&pg=2

check if there is any failed pages,then print number: 4

pagehttps://www.amazon.com/Best-Sellers/zgbs/wireless/ref=zg_bs_pg_2?_encoding=UTF8&pg=2failed again

pagehttps://www.amazon.com/Best-Sellers-Collectible-Coins/zgbs/coins/ref=zg_bs_pg_2?_encoding=UTF8&pg=2failed again

pagehttps://www.amazon.com/Best-Sellers-MP3-Downloads/zgbs/dmusic/ref=zg_bs_pg_1?_encoding=UTF8&pg=1failed again

pagehttps://www.amazon.com/Best-Sellers-MP3-Downloads/zgbs/dmusic/ref=zg_bs_pg_2?_encoding=UTF8&pg=2failed again

Let's check how many of the 80 pages we parsed successfully.

len(all_arcticle_tag)

76

Scrape Data from Every Page

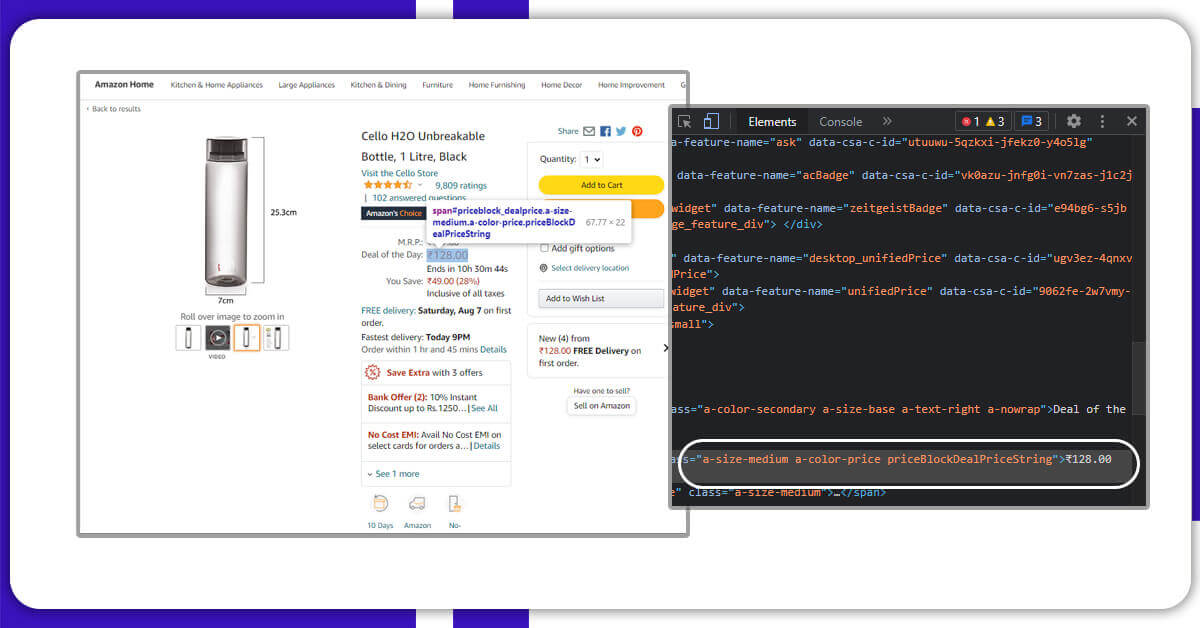

We built functions to obtain information from each page, such as the product description, rating, maximum price, minimum price, review count, and image URL. To find the relevant tags and attributes, open the site, right-click on the area you want to retrieve tags for, and inspect the page. The image shows an example of how to find the item price tags.

To extract the corresponding product details, we created the get_topic_url_item_description function here.

def get_topic_url_item_description(doc,topic_description,topic_url):
    """Take a parent tag, a topic description, and a topic URL. After finding the item name tag,
    return the item name (description), its corresponding topic (category), and its category URL.

    Argument:
    -doc(BeautifulSoup element): parent tag
    -topic_description(string): topic name or category
    -topic_url(string): topic url
    Return:
    -item_description(string): item name
    -topic_description(string): corresponding topic
    -topic_url(string): corresponding topic url"""
    name = doc.find("span", attrs={'class':'zg-text-center-align'})
    try:
        item_description = name.find_all('img', alt=True)[0]["alt"]
    except Exception:
        item_description = ''
    # Return the three values described in the docstring
    return item_description, topic_description, topic_url

get_item_price is used to obtain the associated item's minimum and maximum price.

    def get_item_price(d):
        """The function takes a parent tag as input and looks for the corresponding child tag (item price).
        It returns the minimum and maximum price of the item, and 0.0 when no price is found.

        Arguments:
        - d (BeautifulSoup element): parent tag

        Returns:
        - min_price (float): item minimum price
        - max_price (float): item maximum price (0.0 when the item has a single price)
        """
        p = d.find("span", attrs={"class": "a-size-base a-color-price"})
        try:
            # Remove the currency symbol and thousands separators before parsing.
            # The whole text is parsed rather than only its first 5 characters,
            # so prices of 100 dollars or more are not truncated.
            text = p.text.replace("$", "").replace(",", "")
            if "-" in text:
                # Price range: the first two parts are the minimum and maximum
                low, high = text.split("-")[:2]
                min_price = float(low)
                max_price = float(high)
            else:
                min_price = float(text)
                max_price = 0.0
        except (AttributeError, ValueError):
            # No price tag was found, or its text is not a number
            min_price = 0.0
            max_price = 0.0
        return min_price, max_price
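The price handling can also be tried on plain strings, without a page to parse. The following is a minimal sketch rather than part of the original scraper: parse_price_text is a hypothetical helper, and the label formats it is fed ("$4.99 - $99.99", "$1,299.00") are assumptions about what the price tag text looks like.

```python
import re

# Hypothetical helper for illustration; not part of the original scraper.
# Matches numbers such as 4.99 or 1,299.00 (digits, optional commas, optional decimals).
PRICE_PATTERN = re.compile(r"\d[\d,]*(?:\.\d+)?")

def parse_price_text(text):
    """Return (min_price, max_price) parsed from a price label.

    max_price is 0.0 when the label holds a single price, and both
    values are 0.0 when the label holds no number at all.
    """
    amounts = [float(m.replace(",", "")) for m in PRICE_PATTERN.findall(text or "")]
    if not amounts:
        return 0.0, 0.0
    if len(amounts) == 1:
        return amounts[0], 0.0
    return amounts[0], amounts[1]

print(parse_price_text("$4.99 - $99.99"))         # (4.99, 99.99)
print(parse_price_text("$1,299.00"))              # (1299.0, 0.0)
print(parse_price_text("Currently unavailable"))  # (0.0, 0.0)
```

Matching the numbers directly avoids slicing the text at a fixed length, so the result does not depend on how many digits the price has.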

To extract the matching item rating and customer review count, we defined the get_item_rate and get_item_review functions.

    def get_item_rate(d):
        """The function takes a parent tag as input and looks for the corresponding child tag (rating).
        It returns the item rating out of 5, and 0.0 when no rating is found.

        Arguments:
        - d (BeautifulSoup element): parent tag

        Returns:
        - rating (float): item rating out of 5
        """
        rate = d.find("span", attrs={"class": "a-icon-alt"})
        try:
            # The first three characters of the label hold the rating, e.g. "4.7"
            rating = float(rate.text[:3])
        except (AttributeError, ValueError):
            rating = 0.0
        return rating

    def get_item_review(d):
        """The function takes a parent tag as input and looks for the corresponding child tag (customer reviews).
        It returns the number of reviews, and 0 when no review count is found.

        Arguments:
        - d (BeautifulSoup element): parent tag

        Returns:
        - review (int): number of customer reviews for the item
        """
        review = d.find("a", attrs={"class": "a-size-small a-link-normal"})
        try:
            # Remove thousands separators, e.g. "1,124,438" -> 1124438
            review = int(review.text.replace(",", ""))
        except (AttributeError, ValueError):
            review = 0
        return review
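The text handling in these two functions can be checked separately from the HTML lookup. This is a small sketch under stated assumptions: parse_rating_text and parse_review_count are hypothetical helpers, and the rating label format "4.7 out of 5 stars" is assumed rather than taken from the page source.

```python
import re

# Hypothetical helpers for illustration; not part of the original scraper.

def parse_rating_text(text):
    """Return the leading number of a rating label, or 0.0 if there is none."""
    match = re.match(r"\s*(\d+(?:\.\d+)?)", text or "")
    return float(match.group(1)) if match else 0.0

def parse_review_count(text):
    """Return the digits of a review label as an integer, or 0 if there are none."""
    digits = re.sub(r"[^\d]", "", text or "")
    return int(digits) if digits else 0

print(parse_rating_text("4.7 out of 5 stars"))  # 4.7
print(parse_review_count("1,124,438"))          # 1124438
print(parse_review_count(""))                   # 0
```

Reading the leading number with a pattern, instead of taking the first three characters, keeps working if the label starts with a whole number such as "5 out of 5 stars".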

The get_item_url function is used to obtain the relevant item picture URL.

    def get_item_url(d):
        """The function takes a parent tag as input and looks for the corresponding child tag (image).
        It returns the item image URL, and 'No image' if no image is found.

        Arguments:
        - d (BeautifulSoup element): parent tag

        Returns:
        - img (string): item image URL
        """
        image = d.find_all("img", src=True)
        try:
            # Use the first image that has a src attribute
            img = image[0]["src"]
        except IndexError:
            img = 'No image'
        return img

To make data analysis easier, item data is extracted and stored directly in a usable data type.

String data types are used for the item description and image URL, float data types for the item price and rating, and an integer data type for the customer review count.

Combine the Information Extracted from Each Page in a Python Dictionary

We developed the get_info function to collect all item data as lists and put them in a dictionary.

    def get_info(article_tags, t_description, t_url):
        """The function takes a list of page contents, where each element is a BeautifulSoup
        object used to find the parent tags, together with a list of topic descriptions and
        a list of topic URLs. It returns a dictionary of lists holding each item's data:
        its topic, the topic URL, the item description, minimum price (and maximum price
        if it exists), item rating, customer review count, and item image URL.

        Arguments:
        - article_tags (list): list containing all page contents, each a BeautifulSoup type
        - t_description (list): list containing topic descriptions
        - t_url (list): list containing topic URLs

        Returns:
        - dictionary (dict): dictionary containing all item data taken from each parsed page
        """
        topic_description, topics_url, item, item_url = [], [], [], []
        minimum_price, maximum_price, rating, customer_review = [], [], [], []

        for idx in range(len(article_tags)):
            doc = article_tags[idx]  # .findAll('div', attrs={'class':'a-section a-spacing-none aok-relative'})
            for d in doc:
                names, topic_name, topic_url = get_topic_url_item_description(d, t_description[idx], t_url[idx])
                min_price, max_price = get_item_price(d)
                rate = get_item_rate(d)
                review = get_item_review(d)
                url = get_item_url(d)

                # Put each item's data inside the corresponding list
                item.append(names)
                topic_description.append(topic_name)
                topics_url.append(topic_url)
                minimum_price.append(min_price)
                maximum_price.append(max_price)
                rating.append(rate)
                customer_review.append(review)
                item_url.append(url)

        return {
            "Topic": topic_description,
            "Topic_url": topics_url,
            "Item_description": item,
            "Rating out of 5": rating,
            "Minimum_price": minimum_price,
            "Maximum_price": maximum_price,
            "Review": customer_review,
            "Item Url": item_url}

    data = get_info(all_arcticle_tag, all_topics_description, all_topics_url)
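The dictionary built above is a set of parallel lists: position i in every list describes the same item, so all lists must have the same length. The sketch below shows that pattern in isolation. The rows_to_columns helper and the sample values are made up for illustration and are not part of the original code.

```python
# Hypothetical helper for illustration; not part of the original scraper.
def rows_to_columns(rows, column_names):
    """Turn a list of per-item tuples into a dictionary of equal-length lists."""
    columns = {name: [] for name in column_names}
    for row in rows:
        if len(row) != len(column_names):
            # A short or long row would leave the lists misaligned
            raise ValueError(f"expected {len(column_names)} values, got {len(row)}")
        for name, value in zip(column_names, row):
            columns[name].append(value)
    return columns

# Made-up sample values
rows = [
    ("Sample Topic", "Sample item A", 9.88),
    ("Sample Topic", "Sample item B", 58.0),
]
columns = rows_to_columns(rows, ["Topic", "Item_description", "Minimum_price"])
print(columns["Item_description"])  # ['Sample item A', 'Sample item B']
```

Appending one value to every list for every item, as get_info does, is what keeps the columns aligned when the dictionary is later turned into a table.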

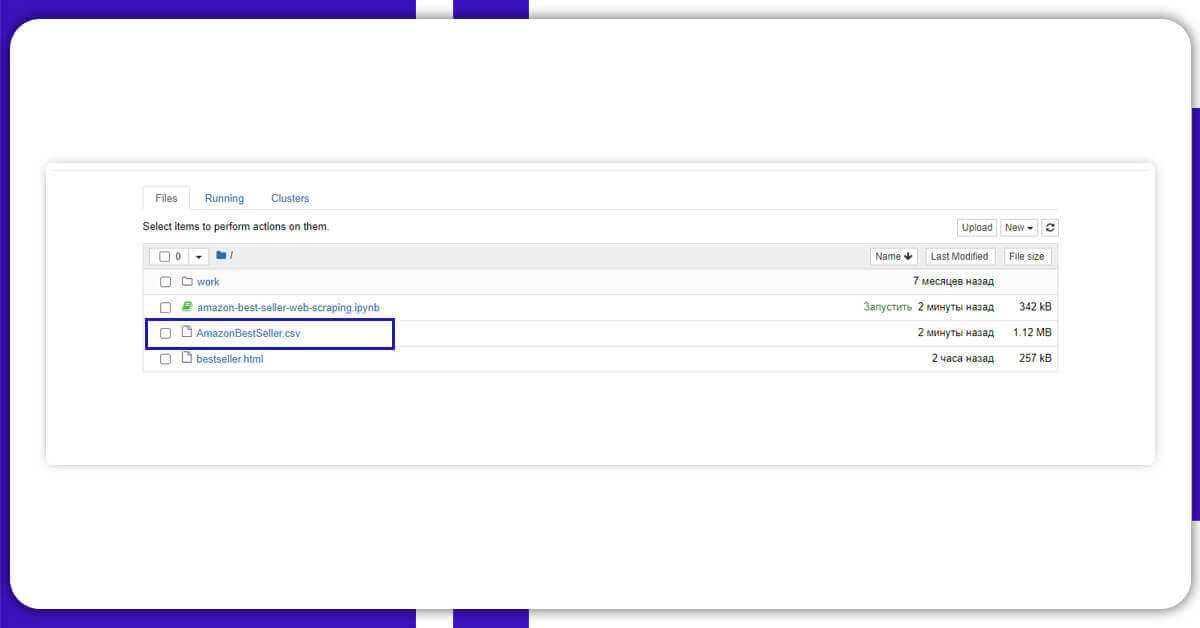

After two parsing attempts, you have collected data from as many pages as could be retrieved. The next step is to store it in a DataFrame using the pandas library.

Save the Data to a CSV File Using the Pandas Library

Let us save the retrieved data to a pandas DataFrame.

    dataframe = pd.DataFrame(data)

Let us display the result and check its size (number of rows and number of columns).

    dataframe

| | Topic | Topic_url | Item_description | Rating out of 5 | Minimum_price | Maximum_price | Review | Item Url |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 0 | Amazon Devices & Accessories | https://www.amazon.com/Best-Sellers/zgbs/amazo... | Fire TV Stick 4K streaming device with Alexa V... | 4.7 | 39.90 | 0.00 | 615786 | https://images-na.ssl-images-amazon.com/images... |
| 1 | Amazon Devices & Accessories | https://www.amazon.com/Best-Sellers/zgbs/amazo... | Fire TV Stick (3rd Gen) with Alexa Voice Remot... | 4.7 | 39.90 | 0.00 | 1884 | https://images-na.ssl-images-amazon.com/images... |
| 2 | Amazon Devices & Accessories | https://www.amazon.com/Best-Sellers/zgbs/amazo... | Echo Dot (3rd Gen) - Smart speaker with Alexa ... | 4.7 | 39.90 | 0.00 | 1124438 | https://images-na.ssl-images-amazon.com/images... |
| 3 | Amazon Devices & Accessories | https://www.amazon.com/Best-Sellers/zgbs/amazo... | Fire TV Stick Lite with Alexa Voice Remote Lit... | 4.7 | 29.90 | 0.00 | 151044 | https://images-na.ssl-images-amazon.com/images... |
| 4 | Amazon Devices & Accessories | https://www.amazon.com/Best-Sellers/zgbs/amazo... | Amazon Smart Plug, works with Alexa – A Certif... | 4.7 | 24.90 | 0.00 | 425111 | https://images-na.ssl-images-amazon.com/images... |
| ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 3795 | Video Games | https://www.amazon.com/best-sellers-video-game... | PowerA Joy Con Comfort Grips for Nintendo Swit... | 4.7 | 9.88 | 0.00 | 21960 | https://images-na.ssl-images-amazon.com/images... |
| 3796 | Amazon Devices & Accessories | https://www.amazon.com/Best-Sellers/zgbs/amazo... | Super Mario 3D All-Stars (Nintendo Switch) | 4.7 | 58.00 | 0.00 | 15109 | https://images-na.ssl-images-amazon.com/images... |
| 3799 | Amazon Devices & Accessories | https://www.amazon.com/Best-Sellers/zgbs/amazo... | NBA 2K21: 15,000 VC - PS4 [Digital Code] | 4.5 | 4.99 | 99.99 | 454 | https://images-na.ssl-images-amazon.com/images... |

3800 rows × 8 columns

We have a DataFrame with 8 columns and 3,800 rows of data. The next step is to use pandas to save the DataFrame to a CSV file.

dataframe.to_csv('AmazonBestSeller.csv', index=None)

You can view the CSV file by clicking File > Open.

Now open the CSV file, read its lines, and print the first 5 lines.

    with open("AmazonBestSeller.csv", "r") as f:
        data = f.readlines()

    data[:5]

['Topic,Topic_url,Item_description,Rating out of 5,Minimum_price,Maximum_price,Review,Item Url\n',

'Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/138-0735230-1420505,Fire TV Stick 4K streaming device with Alexa Voice Remote | Dolby Vision | 2018 release,4.7,39.9,0.0,615786,"https://images-na.ssl-images-amazon.com/images/I/51CgKGfMelL._AC_UL200_SR200,200_.jpg"\n',

'Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/138-0735230-1420505,Fire TV Stick (3rd Gen) with Alexa Voice Remote (includes TV controls) | HD streaming device | 2021 release,4.7,39.9,0.0,1884,"https://images-na.ssl-images-amazon.com/images/I/51KKR5uGn6L._AC_UL200_SR200,200_.jpg"\n',

'Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/138-0735230-1420505,Echo Dot (3rd Gen) - Smart speaker with Alexa - Charcoal,4.7,39.9,0.0,1124438,"https://images-na.ssl-images-amazon.com/images/I/6182S7MYC2L._AC_UL200_SR200,200_.jpg"\n',

'Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/138-0735230-1420505,Fire TV Stick Lite with Alexa Voice Remote Lite (no TV controls) | HD streaming device | 2020 release,4.7,29.9,0.0,151044,"https://images-na.ssl-images-amazon.com/images/I/51Da2Z%2BFTFL._AC_UL200_SR200,200_.jpg"\n']
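In the lines above, the image URL is wrapped in double quotes because it contains a comma. Splitting a line on commas would therefore break that field apart, while Python's built-in csv module honors the quotes. The sketch below shows the difference on a made-up sample; the values and the example.com URL are illustrative, not scraped data.

```python
import csv
import io

# Made-up sample in the same shape as the CSV above (values are illustrative)
sample = (
    "Topic,Item_description,Item Url\n"
    'Sample Topic,"Sample item, with a comma","https://example.com/img._SR200,200_.jpg"\n'
)

first_row_line = sample.splitlines()[1]

# Naive approach: every comma splits, including those inside quoted fields
naive_fields = first_row_line.split(",")

# csv.reader keeps quoted fields whole and removes the surrounding quotes
parsed_fields = next(csv.reader(io.StringIO(first_row_line)))

print(len(naive_fields))   # 5
print(len(parsed_fields))  # 3
print(parsed_fields[2])    # https://example.com/img._SR200,200_.jpg
```

For the same reason, reading the file back with a CSV-aware reader is safer than working with the raw lines returned by readlines.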

Let's start with a basic preprocessing step: find the item with the most customer reviews.

    import numpy as np

    # Column names must match the keys of the dictionary returned by get_info
    max_r = np.max(dataframe["Review"])

    for i in range(len(dataframe)):
        if dataframe["Review"][i] == max_r:
            print("The item with the highest number of review is {}, with {} review".format(dataframe["Item_description"][i], dataframe["Review"][i]))

The item with the highest number of review is Hudson Baby Unisex-Baby Cozy Fleece Booties, with 41555 review
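The same lookup can be written without an explicit loop. This is a minimal sketch, assuming the column names "Item_description" and "Review" from the dictionary built earlier; the rows are made up for illustration and are not scraped data.

```python
import pandas as pd

# Made-up rows for illustration; the real DataFrame comes from the scraper
df = pd.DataFrame({
    "Item_description": ["Sample item A", "Sample item B", "Sample item C"],
    "Review": [120, 4500, 87],
})

# idxmax returns the index label of the first row with the largest value
top = df.loc[df["Review"].idxmax()]
print(top["Item_description"], top["Review"])
```

Note that idxmax reports only the first row when several items share the maximum, whereas the loop above prints every matching row.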

Steps That We Performed

- Install and import the libraries.

- To acquire the item categories and topic URLs, retrieve and analyze the Best Sellers page source code using requests and BeautifulSoup.

- Repeat step 2 for each item topic, using the relevant URL.

- Extract information from each page.

- Combine the data you've gathered into a Python dictionary.

- After completion, save the data in CSV format using the pandas library.

On completion of the project, you will have a CSV file in the following format:

Topic,Topic_url,Item_description,Rating out of 5,Minimum_price,Maximum_price,Review,Item Url

Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/131-6756172-7735956,Fire TV Stick 4K streaming device with Alexa Voice Remote | Dolby Vision | 2018 release,4.7,39.9,0.0,615699,"https://images-na.ssl-images-amazon.com/images/I/51CgKGfMelL._AC_UL200_SR200,200_.jpg"

Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/131-6756172-7735956,Fire TV Stick (3rd Gen) with Alexa Voice Remote (includes TV controls) | HD streaming device | 2021 release,4.7,39.9,0.0,1844,"https://images-na.ssl-images-amazon.com/images/I/51KKR5uGn6L._AC_UL200_SR200,200_.jpg"

Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/131-6756172-7735956,"Amazon Smart Plug, works with Alexa – A Certified for Humans Device",4.7,24.9,0.0,425090,"https://images-na.ssl-images-amazon.com/images/I/41uF7hO8FtL._AC_UL200_SR200,200_.jpg"

Amazon Devices & Accessories,https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/131-6756172-7735956,Fire TV Stick Lite with Alexa Voice Remote Lite (no TV controls) | HD streaming device | 2020 release,4.7,29.9,0.0,151007,"https://images-na.ssl-images-amazon.com/images/I/51Da2Z%2BFTFL._AC_UL200_SR200,200_.jpg"

For any further queries, please contact iWEb Scraping Services.