How to Analyse Amazon Reviews for Climbing Shoe Analysis using Python?

Those who are familiar with the sport recognise the significance of wearing a pair of rock-climbing shoes that fit well. Shoes that don't fit well can be disastrous in a variety of ways. Because it's a fringe sport, finding a pair that fits properly isn't as simple as stepping into a store and trying them on. Climbing shoe stores may be far and few between depending on one's place of residence. As a result, many people opt to buy it online.

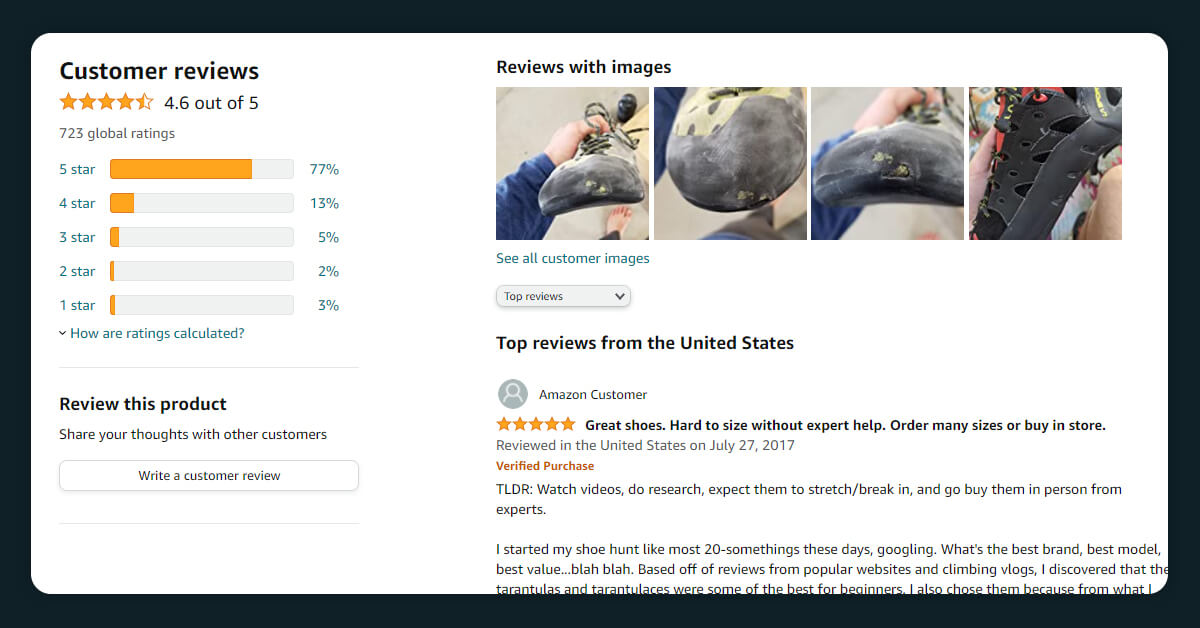

On the other hand, buying a pair, is no easy task, and scrolling through hundreds of reviews is bound to irritate even the most methodical among us. As a result, in this blog, we have scraped various shoe products available on Amazon for detailed study.

Web Scraping Amazon Reviews Dataset

Here, we will use Selenium library in Python and divide the web scraping services in various parts:

Part 1: Fetching Product URLs

The first-step will be to initialize the webdriver. For this we will use Google webdriver which can be downloaded using this link. The below code is used for starting chrome web browser.

from selenium import webdriver driver_path =driver = webdriver.Chrome(executable_path=driver_path)

Then we use get() method to get access to Amazon website. For avoiding simulation of entering search queries and keystrokes, simply attach search query to the base URL. The webpage coming from the user search will have the following format: https://www.amazon.com/ < query will be placed here>

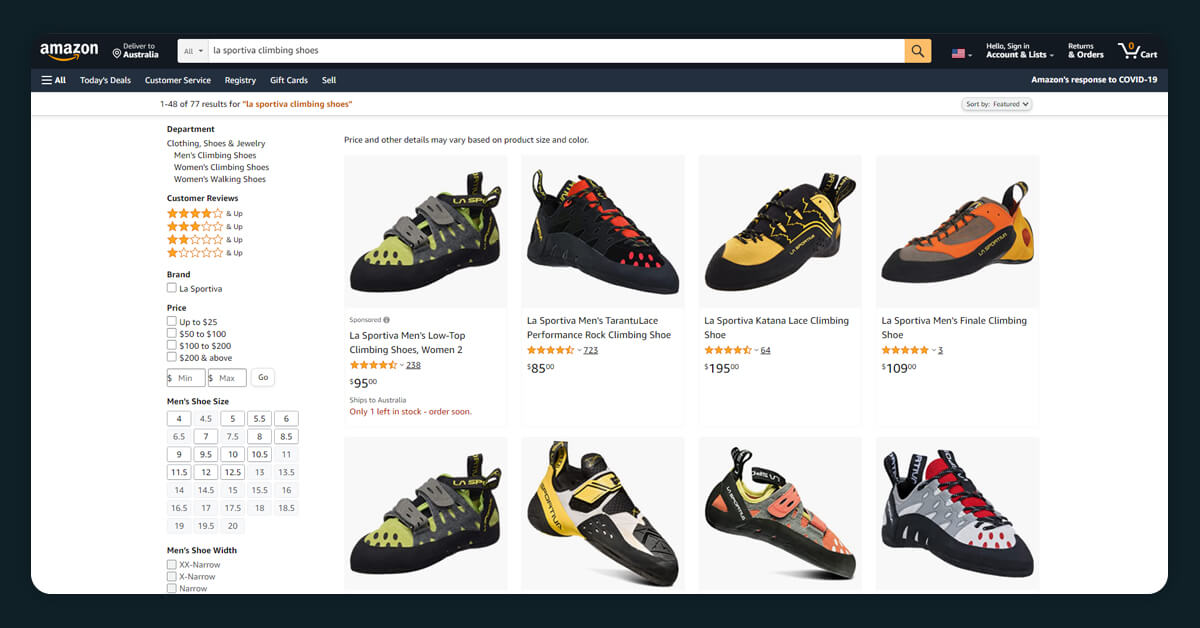

Executing the below script will open the Amazon search result page for search query ‘la sportive climbing shoe’

base_url = 'https://www.amazon.com/' query = 'la sportiva climbing shoe' query_url = base_url+'s?k='+query driver.get(query_url)

The next stage would be to scrape all of the URLs on the page and save them to a file before moving on to the next page of search results. This process continues until you reach the final results page. For this approach, I write a few utility functions.

A string is written to a file using this function. The “a” option determines whether to create a new file (if one has not yet been created) or to publish to an existing file.

def write_to_file(filename,content): f = open(filename, "a") f.write(content+'\n') f.close()

This method scrapes the entire current page for all URLs. If the words "climbing" and "shoe" appear in the URL, it writes the URLs to a file links.txt (using the write to file utility function). To avoid the Python NoneType issue that occurs when Selenium picks up NoneType objects, an additional condition is introduced to evaluate only strings (type(link) == str).

def get_all_urls():

# identify elements with tagname

links = driver.find_elements_by_tag_name("a")

# traverse list

for link in links:

# get_attribute() to get all href

link = link.get_attribute('href')

if (type(link) == str) and ('climbing' in link) and ('shoe' in link):

write_to_file('links.txt',link)

To put it all together, we begin by calculating the number of results pages. The class “a-normal” is used to represent integers in the DOM Tree. Here, you will get all numbers (in this case 1 to 5) using the find elements by class name method, then set the last number to the variable last page (.text extracts the actual text value which is typecast to an int variable).

pages = driver.find_elements_by_class_name("a-normal")

last_page = int(pages[-1].text)

We scrape the URLs by cycling over the results pages after putting the previous two lines into a function. The base URL was combined with both the search query and page number in Amazon's URL structure for each incrementing page number: https://www.amazon.com/s?k=query&page=page number. If the results just had one webpage, this exception handler was created (pages would evaluate to an empty list). Other major climbing shoe brands that went through this process were Ocun, Five Ten, Tenaya, Black Diamond, Scarpa, Mad Rock, Evolv, and Climb X.

def iterate_pages():

pages = driver.find_elements_by_class_name("a-normal")

try:

last_page = int(pages[-1].text)

# base_url would evaluate to ...amazon.com/s?k=

base_url = driver.current_url

for page in range(2,last_page+1):

get_all_urls()

driver.get(base_url+'&page='+str(page))

except IndexError:

get_all_urls()

The initial scraping pass yielded 2655 URLs. Filtering away undesirable URLs, however, required more cleaning. Examine the following list of nefarious URLs:

https://www.amazon.com/gp/slredirect/picassoRedirect.html/ref=pa_sp_mtf_aps_sr_pg1_1?ie=UTF8&adId=A0187488H7LAC5K25U1L&url=%2FTenaya-Oasi-Climbing-Shoe-Womens%2Fdp%2FB00G2G1U5U%2Fref%3Dsr_1_14_sspa%3Fdchild%3D1%26keywords%3Dtenaya%2Bclimbing%2Bshoe%26qid%3D1618629457%26sr%3D8-14-spons%26psc%3D1&qualifier=1618629457&id=3206048678566111&widgetName=sp_mtf https://www.amazon.com/s?k=five+ten+climbing+shoe# https://www.amazon.com/s?k=mad+rock+climbing+shoe#s-skipLinkTargetForFilterOptions https://www.amazon.com/s?k=mad+rock+climbing+shoe#s-skipLinkTargetForMainSearchResults https://aax-us-east.amazon-adsystem.com/x/c/QmbTjAA2xKm5MsGgE8COatYAAAF43dZjkQEAAAH2AQqX8gE/https://www.amazon.com/stores/page/5F070E5E-577B-4B70-956A-02731ECF176A?store_ref=SB_A0421652LWRFVMVX8W4I&pd_rd_w=fsNZy&pf_rd_p=eec71719-2cb3-403b-a8de-e6266852cdb6&pd_rd_wg=uFHFp&pf_rd_r=5E5ZWZNV7GCVDNKR9EC7&pd_rd_r=a8ad5a25-3cd0-4a36-a529-3000b5271936&aaxitk=4VreBt7wvxz.JYuyg-2dbQ&hsa_cr_id=7601608740401&lp_asins=B07BJZTWGR,B07BKRBH7B,B07FQ5CYTK&lp_query=mad%20rock%20climbing%20shoe&lp_slot=desktop-hsa-3psl&ref_=sbx_be_s_3psl_mbd

The first URL is a link to a similar product that is essentially an advertisement (these would refer to the products that have the sponsored tag on the results page). The second, third, and fourth URLs all lead to the search result, not a specific product page. The final URL is an advertisement URL as well.

Let's see how this compares to URLs that lead to individual product pages.

https://www.amazon.com/Mad-Rock-Lotus-Climbing-Shoes/dp/B00L8BT7P4/ref=sr_1_15?dchild=1&keywords=mad%2Brock%2Bclimbing%2Bshoe&qid=1618629518&sr=8-15&th=1 https://www.amazon.com/SCARPA-Instinct-SR-Climbing-Shoe/dp/B07PW8ZLHN/ref=sr_1_93?dchild=1&keywords=scarpa+climbing+shoe&qid=1618629505&sr=8-93 https://www.amazon.com/Climb-Apex-Climbing-Shoe-Yellow/dp/B07MQ79LNZ/ref=sr_1_9?dchild=1&keywords=climb+x+climbing+shoe&qid=1618629539&sr=8-9

The first thing to notice is that clean URLs are free of slredirect and picassoRedirect.html. When we split down a system of clean URLs, we can see that the base URL is accompanied by the product name as the first two sections of the URL. In contrary, “dirty” URLs include the query signifier, s?k=, immediately after the basic URL. Another point to note is that product URLs begin with https://www.amazon.com/, as opposed to the dirty URLs' last example, which did not use that string as its base URL. To filter out these bad URLs, some simple data cleaning using regex was developed.

# Remove sponsored product URLs.

re.search('slredirect',line.strip("\n")):

# Remove results page URLs.

re.search('s?k=',line.strip("\n")):

# Remove URLs that do not start with base URL.

line.strip("\n").startswith("https://www.amazon.com"):

# Remove URLs with gp or ap following its base URL.

re.search('https://www.amazon.com/gp/',line.strip("\n")):

re.search('https://www.amazon.com/ap/',line.strip("\n")):

Furthermore, there seem to be a lot of URLs pointing to almost the same product page (even though their URLs were not 100 percent identical). Consider the following scenario:

https://www.amazon.com/Sportiva-Ultra-Raptor-Running-Yellow/dp/B008I6J7YS/ref=sr_1_46?dchild=1&keywords=la+sportiva+climbing+shoe&qid=1618629379&sr=8-46#customerReviews https://www.amazon.com/Sportiva-Ultra-Raptor-Running-Yellow/dp/B008I6J7YS/ref=sr_1_46?dchild=1&keywords=la+sportiva+climbing+shoe&qid=1618629379&sr=8-46

The URL structure of Amazon is deconstructed in this blog post. In summary, only the “/dp/” and “ASIN” (Amazon Standard Identification Number) sections of URLs were significant. As a result, from the “ref=” section onwards, I erased everything. The final stage was to eliminate any URLs that directed to brands that were not part of this project. The data cleaning process yielded 425 clean, unique, and relevant URLs.

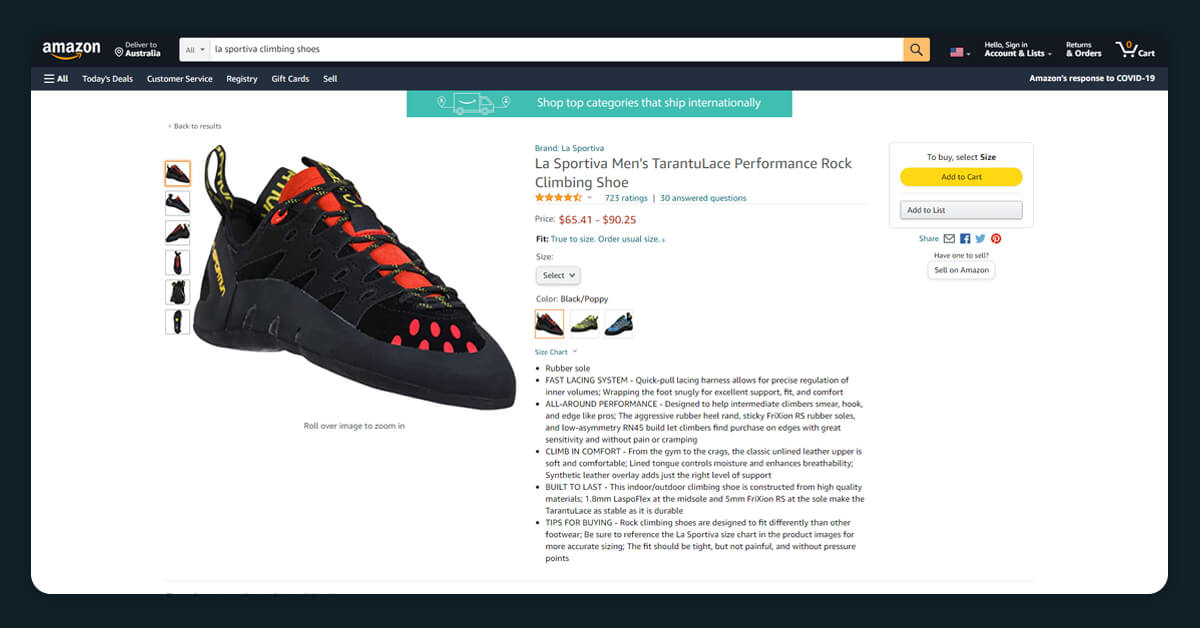

Part 2: Scraping Product Data

The flow of the scraping is as follows:

Go to the product page and scrape product title, product price.

Click on the “Sell all reviews” button and this will open a link.

Scrape all the reviews

On the other hand, Examining the flow of linkages, revealed potential duplication problems. Take a look at the two URLs below, each with a different ASIN.

https://www.amazon.com/Sportiva-Tarantulace-Climbing-Shoes-Black/dp/B081ZF79RK/ https://www.amazon.com/Sportiva-Tarantulace-Climbing-Shoes-Black/dp/B081XVFMZ5/

These hyperlinks are shown to refer to the same products based on the product name. The links on their individual review sites are identical.

https://www.amazon.com/Sportiva-Mens-TarantuLace-Performance-Climbing/product-reviews/B07BCK9CWR/ref=cm_cr_dp_d_show_all_btm?ie=UTF8&reviewerType=all_reviews https://www.amazon.com/Sportiva-Mens-TarantuLace-Performance-Climbing/product-reviews/B07BCK9CWR/ref=cm_cr_dp_d_show_all_btm?ie=UTF8&reviewerType=all_reviews

With this in mind, you can decide two or more product links lead to the same review page, only one of the product URLs should be scraped. The following are some code snippets to help you out:

# Scrapes the product name. prod_name = driver.find_element(By.ID, 'productTitle').text # Scrapes product price. prod_price = driver.find_element(By.ID,'priceblock_ourprice').text # Click translate reviews button. translate_button = driver.find_element(By.XPATH,'/html/body/div[1]/div[3]/div/div[1]/div/div[1]/div[5]/div[3]/div/div[1]/span/a') translate_button.click() # Scrape all reviews on the current page. reviews = driver.find_elements(By.XPATH,'//span[@data-hook="review-body"]') for review in reviews: product_reviews.append(review.text)

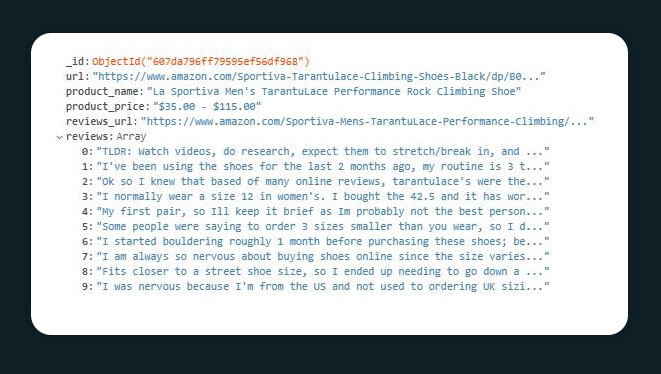

The result of the dataset will have the below structure:

Data Cleaning and Preprocessing

The initial step was to create two brand and model fields. The brand may easily be deduced from the product title. The extraction of the model, on the other hand, proved to be a difficult undertaking. First, we will sample 15 papers and analyze what a product name is made up of to identify the general components of a product name. To do so, we will use the MongoDB Query Language (MQL).

db.products.aggregate(

[{$match:{"reviews_url":{$ne:null}}},

{ $sample: { size: 15 } },

{$project:{"_id":0,"product_name":1}}]

)

This will deliver the subset as follows:

{ "product_name" : "La Sportiva Otaki, Women's Climbing Shoes" }

{ "product_name" : "La Sportiva TX2 Women's Approach Shoe" }

{ "product_name" : "Five Ten Men's Coyote Climbing Shoe" }

{ "product_name" : "La Sportiva TX4 MID GTX Hiking Shoe - Men's" }

{ "product_name" : "Five Ten Men's Stonelands VCS Climbing Shoe" }

{ "product_name" : "Scarpa Women's Gecko WMN Approach Shoe" }

It was time to sanitize the reviews after constructing the derived variables. A random sample of three reviews is shown below.

"I have narrow feet, but couldn't get my feet in these shoes. \nI prefer to wear comfortable climbing shoes, not meaning beginner shoes (though I'm a beginner). \nI asked around and talked with those die-hard climbers, they suggested me wear shoes that I fee comfortable with. \nI strongly agree. \nHow can you climb with shoes that hurt your feet? Not to speak of focusing on it.""I just started climbing regularly and these are great. Good grip.""Great shoes! They have good flexibility."

For text cleaning and preprocessing, Python's NLTK module is used. Customization of data cleaning activities to the algorithm is required for conducting sentiment analysis on Amazon reviews using VADER. In a nutshell, VADER assesses public sentiment by:

- Punctuation

- Capitalization

- Degree modifiers

- Conjunctions

- Words that flip the observations

Below is an example of a review that has had its stop words removed. It may be seen qualitatively that the review's meaning has been preserved.

Great aggressive shoe. I have a wider foot and these fit me great. The laces really allow me to tune the fit. I downsized a half size from my gym shoe size. Initially pretty uncomfortable, but a shower and a few climbing sessions and they fit just right.Great aggressive shoe . I wider foot fit great. The laces really allow tune fit. I downsized half size gym shoe size. Initially pretty uncomfortable, shower climbing sessions fit right.

We also wanted each page to represent a single model for a single brand. The fields name and model could be used as a composite key in a relational database. The code for deleting duplicate documents can be available at the above-mentioned GitHub repository.

We have used VADER to conduct sentiment analysis on the reviews in order to turn them into something quantitative. A short lesson on how to use it may be found here. The following was my code implementation:

def get_sentiment_score(review):

# Create a SentimentIntensityAnalyzer object.

sid_obj = SentimentIntensityAnalyzer()

# polarity_scores method of SentimentIntensityAnalyzer

# oject gives a sentiment dictionary.

# which contains pos, neg, neu, and compound scores.

sentiment_dict = sid_obj.polarity_scores(review)

print("sentence was rated as ", sentiment_dict['neg']*100, "% Negative")

print("sentence was rated as ", sentiment_dict['neu']*100, "% Neutral")

print("sentence was rated as ", sentiment_dict['pos']*100, "% Positive")

print("sentence has a compound score of ", sentiment_dict['compound'])

# decide sentiment as positive, negative and neutral

if sentiment_dict['compound'] >= 0.05 :

print("Sentence Overall Rated As Positive")

elif sentiment_dict['compound'] <= - 0.05 :

print("Sentence Overall Rated As Negative")

else :

print("Sentence Overall Rated As Neutral")

Here are two examples of the output:

"This shoe holds much better older Akasha’s. The fit requires size one euro size normal LS shoes. The cushion great long days rough terrain" sentence was rated as 0.0 % Negative sentence was rated as 70.8 % Neutral sentence was rated as 29.2 % Positive sentence has a compound score of 0.8591 Sentence Overall Rated As Positive"Get size bigger shoe sizes run small." sentence was rated as 0.0 % Negative sentence was rated as 100.0 % Neutral sentence was rated as 0.0 % Positive sentence has a compound score of 0.0 Sentence Overall Rated As Neutral

Analysis and Insights

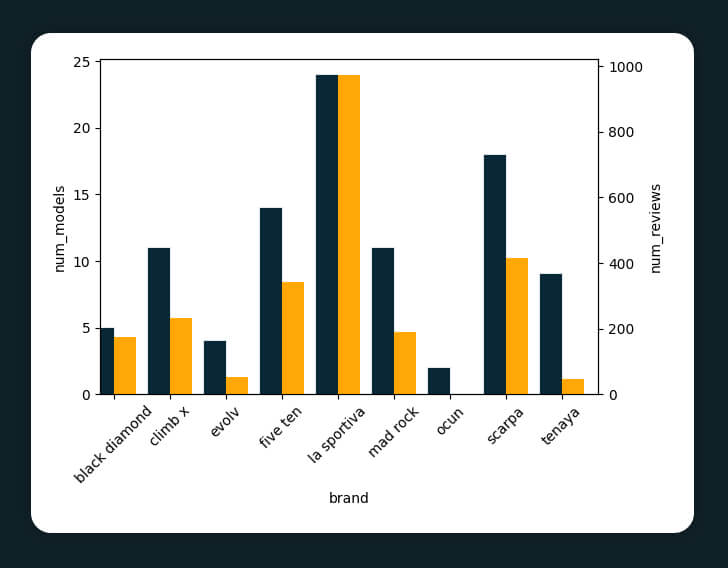

One of the influencing factors for me to make a purchase as an e-commerce consumer is the amount of reviews a product or a brand has on the site. The number of reviews for each brand is listed below. However, the number of models in the dataset varied by brand, which can have an impact on the number of reviews. As a result, we have created a dual-axis bar chart that included the number of models as well as the number of reviews for each brand. The number of models per brand is represented by the red bar, while the number of reviews is represented by the blue bar.

We can now study whether brands have reasonable amounts of reviews in relation to the number of models they sell, as indicated in the graph above. Not only do La Sportiva and Scarpa have the most reviews, but they also have the most models sold. Climb X and Mad Rock both sold almost the same number of models, however Climb X was found to have more positive reviews. Tenaya also had a small number of reviews relative to the number of models they sold. Black Diamond, Evolv, and Ocun all had five or fewer models, although Black Diamond had considerably more reviews than the other two manufacturers.

2. Which Models has Best Reviews?

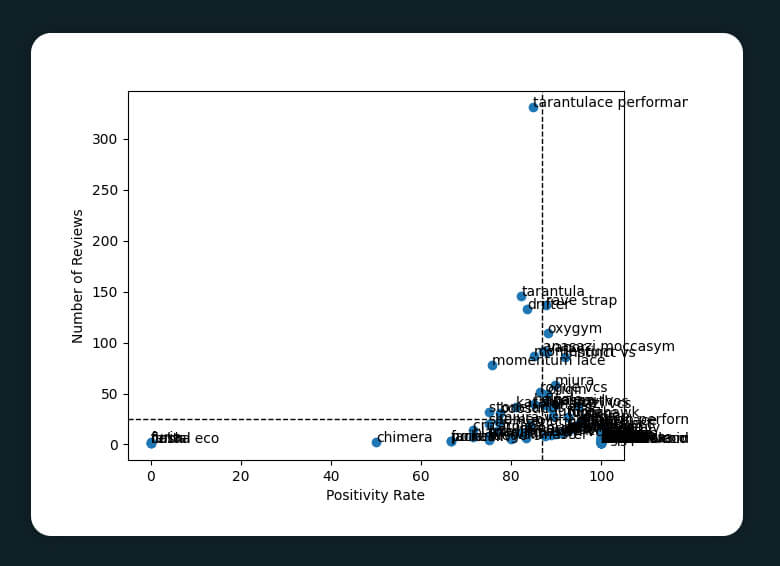

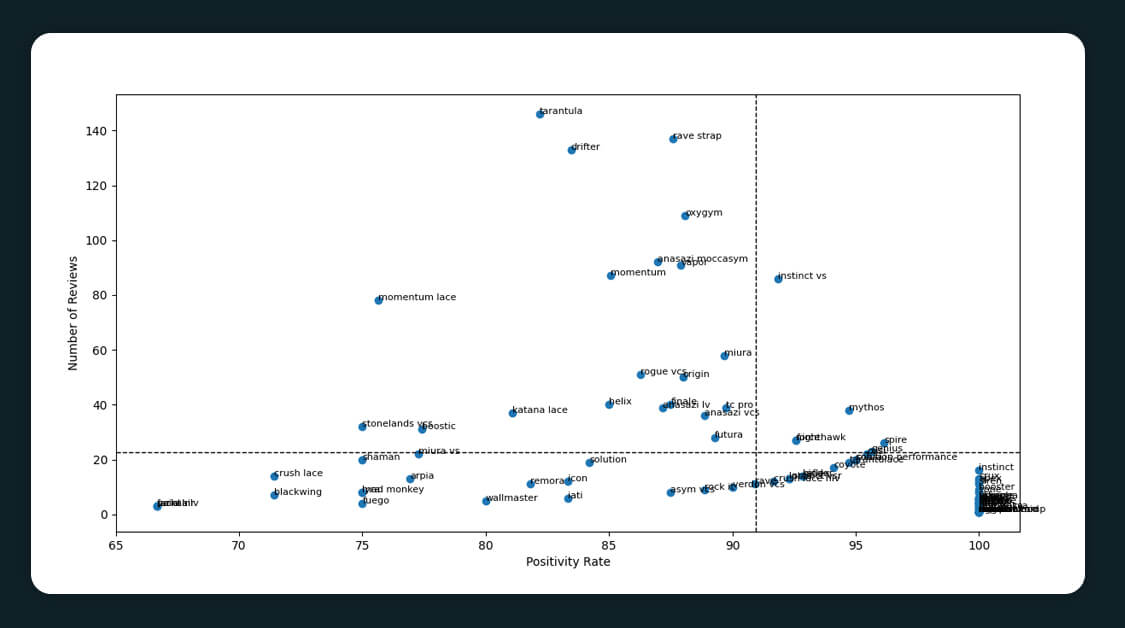

Knowing which model has the best reviews is not enough to buy that product. Ideally, it is necessary to know which the model with best reviews, hence here is the graph showing four quadrants. Every model to the left of the vertical line will show a below-average positivity rate and vice-versa.

The positivity rate for every model is calculated as the number of positive classifications which is divided by the number of reviews multiplied by 100.

As shown in the able plot, the positivity rate is 85, while average number of reviews is around 25. There are a few outliers, such as tarantula performance, which has a substantially higher number of reviews than the rest, and eco, which has a lower positive percentage, among others. To examine the models more thoroughly, I eliminate data points (i.e. models) with a positivity rate of less than 50 and more than 300 reviews.

With a better general opinion, we can now better observe the models. The models in Quadrant 1 (top right box) have a higher-than-average positivity rate and number of reviews. These models are the most popular with the general audience. Quadrant 2 (top left box) shows models that have received positive reviews but have a lower positivity rate than the average. Quadrant 3 (bottom left box) shows the models with the lowest positivity rate and number of reviews, whereas Quadrant 4 (bottom right box) shows several models with an above-average positivity rate but a low number of reviews.

This blog will help in identifying a pairs of climbing show models that are worth to search on Amazon. This framework is similar to other Amazon products with few keywords in the code.

Contact iwebscraping, today and request a quote!!