How to Build a Web Scraping API Using Vert.x and Jsoup in Kotlin and Monetize It

Adding Jsoup to a Gradle Project

As is customary, we begin by creating our Gradle project. This time, we'll include the Jsoup library as a dependency.

About Jsoup

According to its website, https://jsoup.org, Jsoup is a Java library for working with real-world HTML. It provides a convenient API for fetching URLs and for extracting and manipulating data, using HTML5 DOM methods and CSS selectors.
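To give a feel for that API, here is a minimal sketch that parses an HTML string and queries it with a CSS selector. The HTML snippet and the `extractLinks` helper are made up for illustration; only `Jsoup.parse`, `select`, `text`, and `attr` come from Jsoup.

```kotlin
import org.jsoup.Jsoup

// Hypothetical helper: returns (text, href) pairs for every link directly inside an <h2>.
fun extractLinks(html: String): List<Pair<String, String>> =
    Jsoup.parse(html)          // parse the string into a Document
        .select("h2 > a")      // CSS selector: <a> elements that are children of <h2>
        .map { it.text() to it.attr("href") }

fun main() {
    // Made-up HTML standing in for a real page.
    val html = """
        <article><h2><a href="/a">First post</a></h2></article>
        <article><h2><a href="/b">Second post</a></h2></article>
    """.trimIndent()

    extractLinks(html).forEach { (title, link) -> println("$title -> $link") }
}
```

The same selector syntax is used later in the article against live pages.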

With the dependency added, the finished build.gradle looks like this.

    buildscript {
        ext.kotlin_version = '1.6.10'
        ext.vertx_version = '4.2.5'

        repositories {
            mavenCentral()
        }

        dependencies {
            classpath "org.jetbrains.kotlin:kotlin-gradle-plugin:$kotlin_version"
        }
    }

    plugins {
        id 'org.jetbrains.kotlin.jvm' version '1.6.10'
        id 'java'
    }

    // Project coordinates belong to the project, not to the buildscript block
    group = 'WebScraper'
    version = '1.0.0'

    repositories {
        mavenCentral()
    }

    sourceCompatibility = JavaVersion.VERSION_11
    targetCompatibility = JavaVersion.VERSION_11

    dependencies {
        // Kotlin
        implementation 'org.jetbrains.kotlin:kotlin-stdlib'

        // Vertx Core
        implementation "io.vertx:vertx-core:$vertx_version"
        implementation "io.vertx:vertx-lang-kotlin:$vertx_version"

        // Vertx Web
        implementation "io.vertx:vertx-web:$vertx_version"

        // Vertx Rxjava
        implementation "io.vertx:vertx-rx-java3:$vertx_version"
        implementation "io.vertx:vertx-rx-java3-gen:$vertx_version"

        // Jsoup
        implementation 'org.jsoup:jsoup:1.14.3'
    }

    jar {
        duplicatesStrategy = DuplicatesStrategy.EXCLUDE

        manifest {
            // Qualified with project so these are the project's group and version,
            // not properties of the jar task
            attributes 'Implementation-Title': project.group
            attributes 'Implementation-Version': project.version
            attributes 'Main-Class': 'AppKt'
        }

        // Bundle all runtime dependencies into a single fat jar
        from {
            configurations.runtimeClasspath.collect { it.isDirectory() ? it : zipTree(it) }
        }
    }

    task cleanAndJar {
        group = 'build'
        description = 'Clean and create jar'

        dependsOn clean
        dependsOn jar
    }

    compileKotlin {
        kotlinOptions {
            // jvmTarget is a String option
            jvmTarget = '11'
        }
    }

Source File Structures

This is how our source files will be organized.

    - src
    | - main
    | | - java
    | | - kotlin
    | | | - verticle
    | | | | - HttpServerVerticle.kt
    | | | | - MainVerticle.kt
    | | | - App.kt

App.kt contains the main function that starts the service.

HttpServerVerticle.kt is the class where our REST API code will be written.

MainVerticle.kt is the main verticle, which deploys the HttpServerVerticle class.

Implementing the Web Scraping Service

In our HttpServerVerticle class, we'll use Jsoup to construct the web scraper.

    package verticle

    import io.vertx.core.Promise
    import io.vertx.core.impl.logging.LoggerFactory
    import io.vertx.core.json.JsonArray
    import io.vertx.core.json.JsonObject
    import io.vertx.rxjava3.core.AbstractVerticle
    import io.vertx.rxjava3.ext.web.Router
    import io.vertx.rxjava3.ext.web.RoutingContext
    import org.jsoup.Jsoup
    import java.text.SimpleDateFormat
    import java.util.Locale

    class HttpServerVerticle : AbstractVerticle() {

        private val logger = LoggerFactory.getLogger(HttpServerVerticle::class.java)

        override fun start(promise: Promise<Void>) {
            // Register the single GET endpoint of the service
            val router = Router.router(vertx).apply {
                get("/web-scraper/scrape").handler(this@HttpServerVerticle::scrape)
            }

            vertx
                .createHttpServer()
                .requestHandler(router)
                .rxListen(8282)
                .subscribe(
                    { promise.complete() },
                    // Pass the failure itself; its cause may be null
                    { failure -> promise.fail(failure) })
        }

        private fun scrape(context: RoutingContext) {
            // Note: Jsoup's get() is a blocking call, so the event loop waits while the pages are fetched
            val responses = JsonArray()
            responses.addAll(scrapeCnbc())
            responses.addAll(scrapeGeekWire())
            responses.addAll(scrapeTechStartups())

            context.response().statusCode = 200
            context.response().putHeader("Content-Type", "application/json")
            context.response().end(responses.encode())
        }

        private fun scrapeCnbc(): JsonArray {
            val responses = JsonArray()
            val document = Jsoup.connect("https://www.cnbc.com/startups/").get()
            val pageRowElements = document.select("div.PageBuilder-pageRow")

            for (pageRowElement in pageRowElements) {
                try {
                    val moduleHeaderTitle = pageRowElement.selectFirst("h2.ModuleHeader-title")

                    // Only the "More In Start-ups" row holds the article cards we want
                    if (moduleHeaderTitle?.text() == "More In Start-ups") {
                        val elements = pageRowElement.select("div.Card-standardBreakerCard")

                        for (element in elements) {
                            val title = element.selectFirst("a.Card-title")
                            val datePost = element.selectFirst("span.Card-time")
                            val dateFormat = SimpleDateFormat("EEE, MMM d yyyy", Locale.ENGLISH)
                            // Strip ordinal suffixes ("3rd" -> "3") only when they follow a digit
                            val dateString = datePost?.text()?.replace("(?<=\\d)(st|nd|rd|th)".toRegex(), "")
                            val date = dateFormat.parse(dateString)

                            responses.add(JsonObject().apply {
                                put("title", title?.text())
                                put("link", title?.attr("href"))
                                put("timestamp", date.time)
                            })
                        }
                    }
                } catch (e: Exception) {
                    // A missing or unparseable date ends up here; skip the row and carry on
                    logger.error(e.message)
                }
            }

            return responses
        }

        private fun scrapeGeekWire(): JsonArray {
            val responses = JsonArray()
            val document = Jsoup.connect("https://www.geekwire.com/startups/").get()
            val articleElements = document.select("article.type-post")

            for (articleElement in articleElements) {
                try {
                    val title = articleElement.selectFirst("h2.entry-title > a")
                    val datePost = articleElement.selectFirst("time.published")
                    val dateFormat = SimpleDateFormat("MMM d, yyyy", Locale.ENGLISH)
                    val dateString = datePost?.text()
                    val date = dateFormat.parse(dateString)

                    responses.add(JsonObject().apply {
                        put("title", title?.text())
                        put("link", title?.attr("href"))
                        put("timestamp", date.time)
                    })
                } catch (e: Exception) {
                    logger.error(e.message)
                }
            }

            return responses
        }

        private fun scrapeTechStartups(): JsonArray {
            val responses = JsonArray()
            val document = Jsoup.connect("https://techstartups.com/").get()
            val postElements = document.select("div.post.type-post")

            for (postElement in postElements) {
                try {
                    val title = postElement.selectFirst("h5 > a")
                    val datePost = postElement.selectFirst("span.post_info_date")
                    val dateFormat = SimpleDateFormat("MMM d, yyyy", Locale.ENGLISH)
                    // The date text is prefixed with "Posted On "
                    val dateString = datePost?.text()?.replace("Posted On ", "")
                    val date = dateFormat.parse(dateString)

                    responses.add(JsonObject().apply {
                        put("title", title?.text())
                        put("link", title?.attr("href"))
                        put("timestamp", date.time)
                    })
                } catch (e: Exception) {
                    logger.error(e.message)
                }
            }

            return responses
        }
    }
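The CNBC dates carry ordinal suffixes such as "3rd", which SimpleDateFormat cannot parse directly, so scrapeCnbc() strips them before parsing. As a standalone sketch of that step (the helper names `stripOrdinal` and `parseCnbcDate`, the sample dates, and the choice of UTC are assumptions for illustration), the suffix can be removed only where it follows a digit, which leaves a full month name such as "August" intact:

```kotlin
import java.text.SimpleDateFormat
import java.util.Locale
import java.util.TimeZone

// Removes an ordinal suffix only when it directly follows a digit.
fun stripOrdinal(text: String): String =
    text.replace(Regex("(?<=\\d)(st|nd|rd|th)"), "")

// Hypothetical helper: parses e.g. "Thu, Mar 3rd 2022" into epoch milliseconds.
fun parseCnbcDate(text: String): Long {
    val format = SimpleDateFormat("EEE, MMM d yyyy", Locale.ENGLISH)
    format.timeZone = TimeZone.getTimeZone("UTC") // assumption: treat the date as UTC
    return format.parse(stripOrdinal(text)).time
}

fun main() {
    println(stripOrdinal("Sun, August 21st 2022")) // prints Sun, August 21 2022
    println(parseCnbcDate("Thu, Mar 3rd 2022"))    // prints 1646265600000
}
```

Fixing the locale and time zone keeps the result independent of the machine the service runs on.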

Let's walk through scrapeGeekWire() as an example, starting with the line that fetches the page.

    val document = Jsoup.connect("https://www.geekwire.com/startups/").get()

The connect() function takes the URL of the page. The get() function then sends an HTTP GET request and parses the response into a Document, which holds the entire HTML DOM.
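In practice a request can fail or hang, so it may help to set a timeout and handle errors around get(). The following is a sketch only: the `fetchDocument` helper, the user-agent string, and the 10-second timeout are assumptions, not part of the original service.

```kotlin
import org.jsoup.Jsoup
import org.jsoup.nodes.Document
import java.io.IOException

// Hypothetical helper: fetches a page, or returns null if the request fails.
fun fetchDocument(url: String): Document? =
    try {
        Jsoup.connect(url)
            .userAgent("Mozilla/5.0 (compatible; WebScraper/1.0)") // assumed value
            .timeout(10_000)                                       // milliseconds
            .get()
    } catch (e: IOException) {
        // Covers connection errors, timeouts, and non-2xx HTTP responses
        println("Could not fetch $url: ${e.message}")
        null
    }

fun main() {
    val document = fetchDocument("https://www.geekwire.com/startups/")
    println(document?.title() ?: "no document")
}
```

Returning null instead of throwing lets a caller skip one failing site and still return results from the others.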

Next, we use a CSS selector to query the document.

    val articleElements = document.select("article.type-post")

This is comparable to selectors in JavaScript's jQuery library.

The select() function finds all elements that match the selector and returns them as an Elements object, which is a list of Element. So, in the following line, we iterate over it.

Inside the loop, we pick out the title link of each article.

    val title = articleElement.selectFirst("h2.entry-title > a")

The selectFirst() function returns only the first element that matches the selector, or null if nothing matches. As a result, the selector should be specific enough to identify the intended element within each of the articleElements, and the result is accessed with the safe-call operator (?.).
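The difference between select() and selectFirst() can be sketched on a small, made-up HTML fragment. The `titles` helper and the markup are illustrative assumptions; the selectors are the ones used for GeekWire above.

```kotlin
import org.jsoup.Jsoup

// Hypothetical helper: collects the title text of every article that has a title link.
fun titles(html: String): List<String> {
    // select() returns all matches (an empty list when there are none)
    val articles = Jsoup.parse(html).select("article.type-post")
    return articles.mapNotNull { article ->
        // selectFirst() returns null when nothing inside this article matches
        article.selectFirst("h2.entry-title > a")?.text()
    }
}

fun main() {
    // Made-up markup: the second article has no title link.
    val html = """
        <article class="type-post"><h2 class="entry-title"><a href="/a">Has a title</a></h2></article>
        <article class="type-post"><p>No title here</p></article>
    """.trimIndent()

    println(titles(html)) // prints [Has a title]
}
```

Using mapNotNull drops articles without a title instead of emitting null entries.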

Finally, the scraped data is wrapped in a JsonObject and added to the JsonArray.

    responses.add(JsonObject().apply {
        put("title", title?.text())
        put("link", title?.attr("href"))
        put("timestamp", date.time)
    })
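To see the shape of the JSON this produces, the same structure can be built by hand. This is a sketch with made-up values; the `toJson` helper is hypothetical, while `JsonObject.put`, `JsonArray.add`, and `encode` are the Vert.x calls used by the scraper.

```kotlin
import io.vertx.core.json.JsonArray
import io.vertx.core.json.JsonObject

// Hypothetical helper mirroring the fields stored by the scraper.
fun toJson(title: String?, link: String?, timestamp: Long): JsonObject =
    JsonObject()
        .put("title", title)
        .put("link", link)
        .put("timestamp", timestamp)

fun main() {
    val responses = JsonArray()
    // Made-up sample entry
    responses.add(toJson("Example headline", "https://example.com/post", 1646265600000L))

    // encode() serializes the array to the JSON string sent in the HTTP response
    println(responses.encode())
}
```

Each scraped article becomes one object with title, link, and timestamp fields, and the API returns them all in a single array.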

MainVerticle.kt & App.kt

These are the standard Vert.x classes and methods for deploying our service.

MainVerticle.kt:

    package verticle

    import io.vertx.core.Promise
    import io.vertx.rxjava3.core.AbstractVerticle
    import io.vertx.rxjava3.core.RxHelper

    class MainVerticle : AbstractVerticle() {

        override fun start(promise: Promise<Void>) {
            // Deploy the HTTP server verticle and report the outcome to Vert.x
            RxHelper.deployVerticle(vertx, HttpServerVerticle())
                .subscribe(
                    { promise.complete() },
                    promise::fail)
        }
    }

App.kt:

    import io.vertx.core.Launcher
    import verticle.MainVerticle

    fun main() {
        // Equivalent to the Vert.x command line "run <main verticle>"
        Launcher.executeCommand("run", MainVerticle::class.java.name)
    }

Build and Run the Web Scraper

Once the code is written, build the project by running the cleanAndJar Gradle task.

Once you have your jar file, simply run:

$ java -jar build/libs/WebScraper-1.0.0.jar

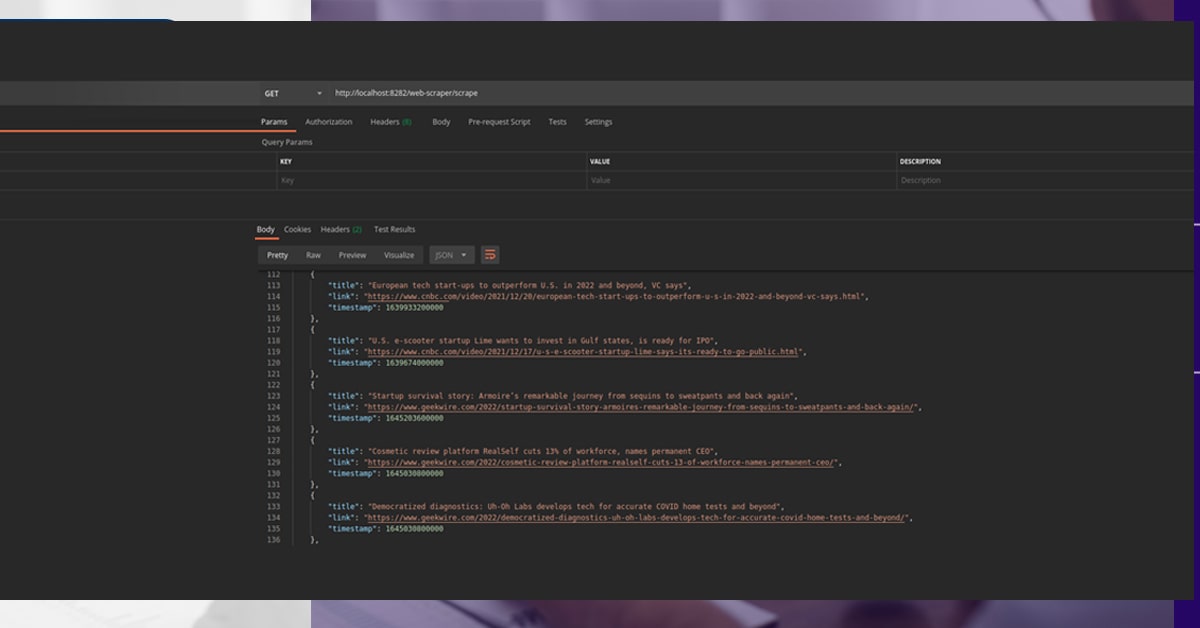

It's time to put our web scraper API to the test. You can use a tool such as Postman to send a GET request to http://localhost:8282/web-scraper/scrape and inspect the JSON array it returns.
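If you prefer to check the endpoint from code rather than from Postman, a small client built on the JDK 11 HttpClient will do. This is a sketch that assumes the service is running locally on port 8282 as configured above; the `scrapeRequest` helper is hypothetical.

```kotlin
import java.net.URI
import java.net.http.HttpClient
import java.net.http.HttpRequest
import java.net.http.HttpResponse

// Builds the GET request for the endpoint registered in HttpServerVerticle.
fun scrapeRequest(host: String = "localhost", port: Int = 8282): HttpRequest =
    HttpRequest.newBuilder(URI.create("http://$host:$port/web-scraper/scrape"))
        .GET()
        .build()

fun main() {
    // Requires the service to be running; otherwise send() throws an exception
    val response = HttpClient.newHttpClient()
        .send(scrapeRequest(), HttpResponse.BodyHandlers.ofString())

    println("HTTP ${response.statusCode()}")
    println(response.body())
}
```

A 200 status with a JSON array in the body indicates that the scraper reached the target sites.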

For any web scraping service requirements, you can contact iWeb Scraping and request a quote.