Python-backed Walmart Product Data Scraping: A Simple Overview

Introduction

Walmart is the 'tour de force' of the global retail chain. Since its inception in 1962, Walmart has become a household name and one of the best-known brands in the world. Currently, Walmart has 10500 stores worldwide and attracts about 240 million customers per week globally. One of the fascinating aspects of Walmart is that it presents a wealth of public data available for use by other enterprises and commercial ventures. The best way to co-opt Walmart's data is by web scraping. What is Walmart web scraping, and how can it help your business? Let us find out!

Introduction to Walmart Product Data Scraping

In a layperson's language, web scraping is an automated method to extricate enormous data from websites. Most of the data on a website is in an unstructured format. However, scraping helps collect unstructured data and convert it into a structured format in a database or spreadsheet. Unlike the exhausting process of manually obtaining data, web scraping appropriates AI and similar technology to extract millions of data sets in a much smaller period. There are multiple ways of scraping website data, such as online services, APIs, personalized code, and even Python. The millions of product or customer-related data publicly available on Walmart can be scraped for various benefits, some of which are mentioned below.

Top Four Benefits of Walmart Product Data Scraping

Retailers of all types and scales can scrape Walmart data for an assortment of advantages. It can help strategize product prices, competitor tracking, etc.

- Price Comparison – Walmart is considered the gold standard for affordable pricing in the USA and many other regions of the world. Thus, with Walmart product data scraping, you can collect metrics on product prices and analyze them to devise an excellent pricing plan for your own products or online retail store.

- Email Address Gathering – Many retailers, including Walmart, use email as a medium of marketing. Web scraping lets you collect email IDs and send bulk mail for promotional purposes.

- Social Media Scraping – It is also possible to scrape data from Walmart's social media pages on LinkedIn and Twitter to find out which products are trending.

- Research and Development – Another veritable benefit of scraping data from Walmart's website is that you can analyze them to conduct surveys or Research and Development for your own retail products, retail chain, online store, etc.

Now that we know why Walmart data scraping is beneficial, let us delve into the various ways to scrape data from a website.

Top Ways to Scrape Data from a Website

When you run the code for web data scraping, a request is forwarded to the URL you typed or mentioned. In response to the request, the server sends the data and allows you to read the HTML or XML page. The code then parses the HTML or XML page and finds and extracts the data. Thus, to extricate data using Python-based web scraping, you have to follow the simple steps mentioned below –

- Find the URL (Walmart) that you want to scrape

- Inspect the page

- Find the data you want to extract

- Write the code

- Run the code and extract the data

- Store the data in a desirable format

The Top Three Libraries Useful for Web Scraping

It is common knowledge that Python has diverse applications, and there are different libraries for various purposes. The most useful Python libraries for Walmart product data scraping are-

- Pandas – It is a library that you can co-opt for data manipulation and analysis. Pandas is used to extract data and store it in a format of your choice.

- Selenium – It is a web testing library. You can use Selenium to automate browser activities.

- Beautiful Soup – It is a Python package for parsing HTML and XML documents. Beautiful Soul creates 'parse trees' that are invaluable for daily or regular data extraction.

Twelve Steps to Webscrape Walmart Data Using Python

Now that we have a crisp idea of Python-backed web scraping, let us examine the steps to achieve the same with a small tutorial. Firstly, you must create a folder and install all the libraries, such as Requests, Beautiful Soup, etc., you might need during the guide. For the purpose of the tutorial, let us install two libraries – Requests for HTTP connection with Walmart and Beautiful Soup to create an HTML tree for seamless data extraction. Inside the folder, you can create a Python file where the code will be written. Let us assume that we want to scrape the following data from a specific Walmart page –

- Name

- Price

- Rating

- Product Details

Then, we have to follow the steps outlined below –

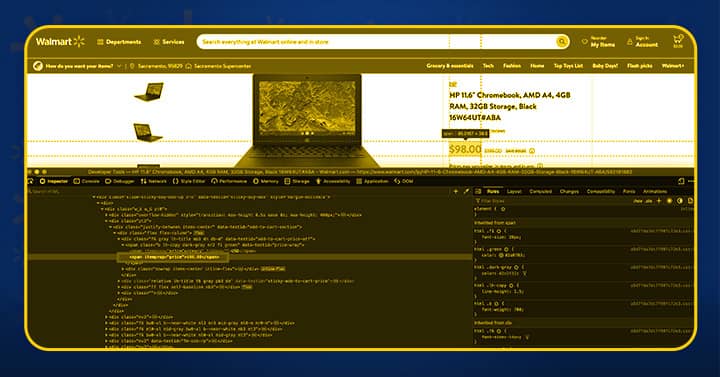

1. The first step is to find the locations of the aforementioned elements in the HTML code via thorough inspection.

2. The name will be saved as 'tag h1' with itemprop as the attribute. Then, it is time to see where the price is stored.

3. A "span tag" with the attribute itemprop and the value "price" is commonly used to hold the price.

4. Likewise, product detail is stored under 'div tag' with class dangerous-html. Send a standard GET request to the destination page and wait for its response.

5. After you make a GET request to Walmart, they may present you with a captcha if they suspect you are not using a browser but rather a script or crawler. To overcome this hurdle, you can simply send metadata or headers that will make Walmart consider the request legitimate and coming from a licit browser.

6. Then, to bypass Walmart's security wall, you can use seven different headers, namely –

- Accept

- Accept-Encoding

- Accept-Language

- User-Agent

- Referer

- Host Connect

7. After that, you might notice Walmart sending a 200-status code even after the Captcha is returned. To tackle the problem, you can use an if/else statement. When a captcha is presented, and the response is "True," like in this case, the request was unsuccessful at Walmart; otherwise, it was successful. The following step includes extracting the data of interest.

8. As we have already gauged the precise location of all the data elements, the next step is to use BS4 to scrape every value systematically.

9. For instance, after extracting the 'price' and replacing 'Now' (garbage string) with an empty string, you can co-opt the 'try/except' statements to grasp any errors. Similarly, you can extract the data regarding the name and the rating of the product.

10. After you scroll down to the HTML data returned by the Python script, you will not find the 'dangerous-html class' because the Walmart website uses the Nextjs framework that sends JSON data once the page has been rendered completely. Thus, when the socket connection was broken, the product description aspect of the website was not loaded. However, the problem has an easy solution and can be scraped in two simple steps. Every Nextjs-backed website has a script tag with the id - _NEXT_DATA_.

11. The script will return all the JSON data required, which is made possible with Javascript and cannot be scraped with a simple HTTP GET request. Thus, the key is to find it using BS4 and then load it using the JSON library.

12. The gargantuan amount of JSON data might seem intimidating at first. But, tools such as JSON viewer make it easy to figure out the precise location of the desired object. Thus, we have all the data we were scouring for, including the Walmart product data scraped earlier.

Conclusion

So, there we have it, a crisp overview of Walmart product data scraping using Python. As it is clear by now, Python is an excellent tool for web scraping. It comes with many possibilities; all you need is the right mindset to explore the same.