How Is Web Scraping Used to Scrape Amazon and Other Large E-Commerce Websites at Scale?

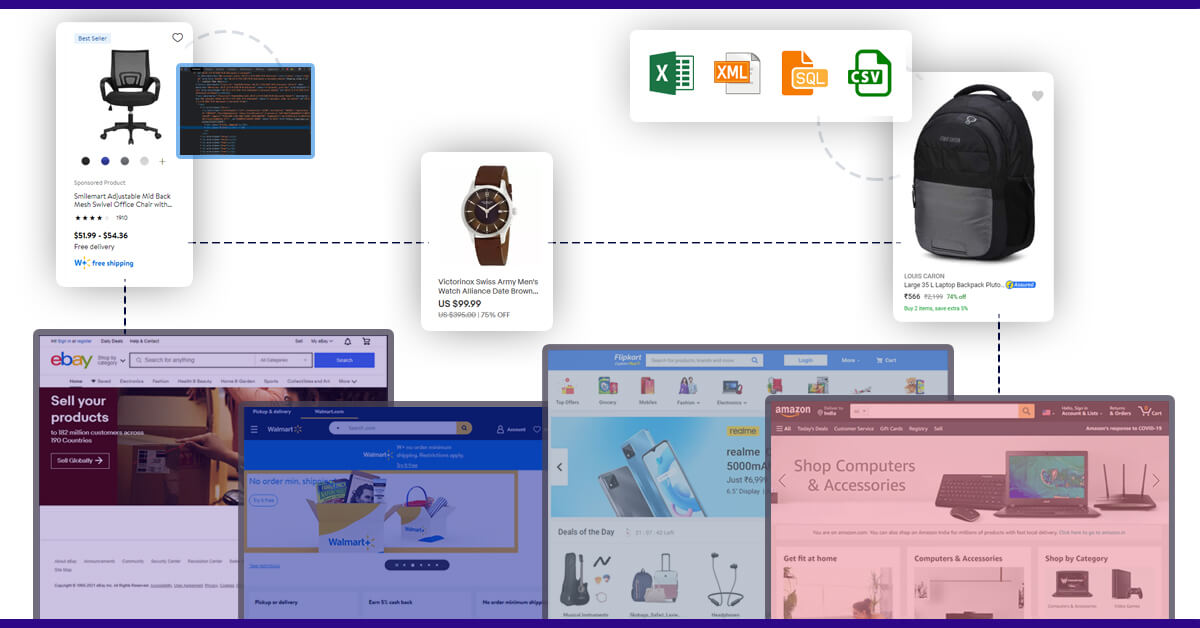

The e-commerce industry is becoming increasingly data-driven, and scraping product data from Amazon and other large e-commerce websites is an important part of competitor analysis. Amazon alone lists a huge number of products (more than 120 million at the time of writing). Extracting this data regularly is a massive undertaking.

At iWeb Scraping, we work with many customers to help them access data. However, for a variety of reasons, some companies need to build an in-house team to extract data. This blog post is for those who need to know how to set up and scale such a team.

Assumptions

These assumptions give an idea of the scale, effort, and challenges involved.

- You want to extract product information from 20 large e-commerce websites, including Amazon.

- From each website, you need data for 20-25 subcategories within the electronics category, out of roughly 450 subcategories in total.

- The refresh frequency varies by subcategory: 10 of the 20 subcategories require a daily refresh, 5 require data every 2 days, 3 every 3 days, and 2 once a week.

- Four of the websites use anti-scraping technologies.

- Depending on the day of the week, the volume ranges from 3 million to 7 million records per day.
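To get a feel for that volume, here is a back-of-the-envelope calculation in Python. It assumes one HTTP request per record, requests spread evenly over 24 hours, and an average of 2 seconds per request; all three are simplifying assumptions, and retries or review pages will push the real numbers higher.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def required_rate(records_per_day: int, requests_per_record: float = 1.0) -> float:
    """Average requests per second needed to finish within one day.

    Assumes requests are spread evenly across the whole day.
    """
    return records_per_day * requests_per_record / SECONDS_PER_DAY


def workers_needed(records_per_day: int, seconds_per_request: float) -> int:
    """Concurrent workers needed if each request takes `seconds_per_request`."""
    return math.ceil(required_rate(records_per_day) * seconds_per_request)


for volume in (3_000_000, 7_000_000):
    print(f"{volume:,} records/day -> {required_rate(volume):.1f} req/s, "
          f"{workers_needed(volume, 2.0)} workers at 2 s/request")
```

Under these assumptions, 3 million records a day works out to roughly 35 requests per second and 7 million to roughly 81, far beyond what a single scraper running in a loop can sustain.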

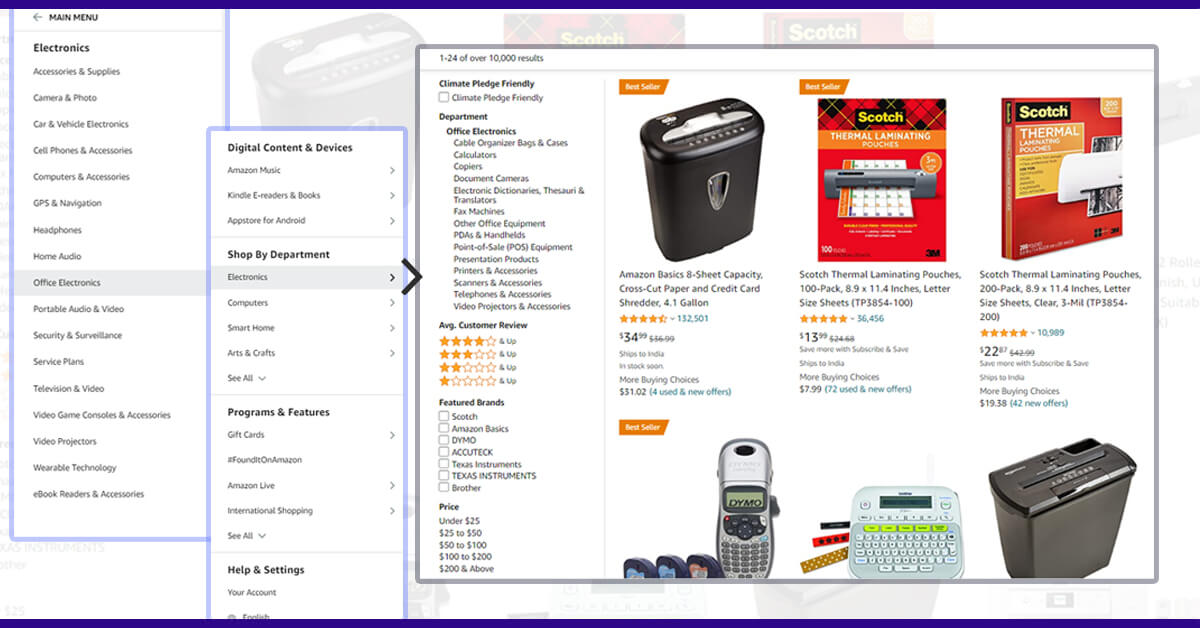

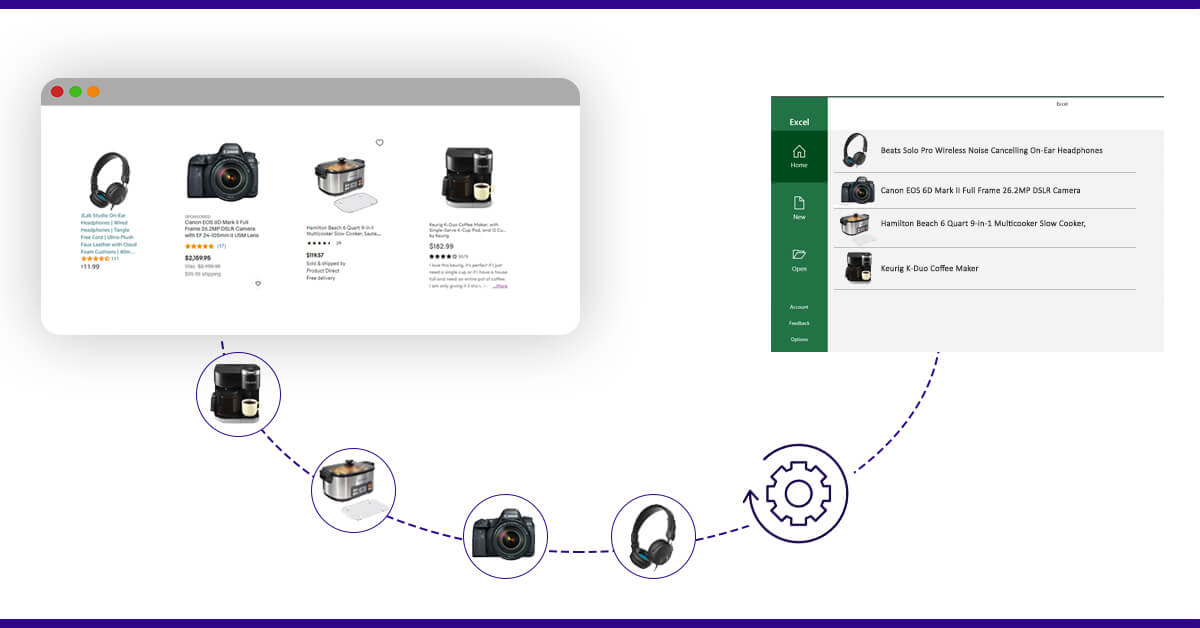

Understanding E-Commerce Data Fields

First, we need to understand the data we are collecting. Using Amazon as an example, these are the fields to extract:

- Product URL

- Breadcrumb

- Product Name

- Product Description

- Pricing

- Discount

- Stock Details

- Image URL

- Ratings
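It helps to pin these fields down in a single schema before writing any scraper. The dataclass below is a minimal sketch; the attribute names and types (for example, storing the price as a `Decimal` and stock details as free-text status) are assumptions, not a fixed standard.

```python
from dataclasses import asdict, dataclass, field
from decimal import Decimal
from typing import List, Optional


@dataclass
class ProductRecord:
    """One scraped product; attribute names and types are illustrative assumptions."""
    product_url: str
    breadcrumb: List[str]                  # e.g. ["Electronics", "Phones"]
    product_name: str
    product_description: Optional[str] = None
    price: Optional[Decimal] = None        # Decimal avoids float rounding on money
    discount: Optional[Decimal] = None     # discount amount as shown on the page
    stock_status: Optional[str] = None     # raw stock text, e.g. "In Stock"
    image_urls: List[str] = field(default_factory=list)
    rating: Optional[float] = None         # average star rating

    def missing_fields(self) -> List[str]:
        """Names of fields that are unset or empty in this record."""
        return [name for name, value in asdict(self).items()
                if value is None or value == "" or value == []]
```

A shared schema like this also gives the QA checks later in the pipeline something concrete to validate against.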

The Periodicity

The refresh frequency varies by subcategory. Ten of the twenty subcategories (for one website) require a daily refresh, five require data every two days, three every three days, and two once a week. These frequencies may change later, depending entirely on how the business team's priorities shift.
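One way to encode these rules is to store a refresh interval in days for each subcategory and check which ones are due on a given date. The sketch below uses placeholder subcategory names and an arbitrary reference date; both are assumptions.

```python
from datetime import date

# Hypothetical subcategory names; intervals follow the 10/5/3/2 split above.
REFRESH_DAYS = {
    **{f"daily_{i}": 1 for i in range(10)},
    **{f"every2_{i}": 2 for i in range(5)},
    **{f"every3_{i}": 3 for i in range(3)},
    **{f"weekly_{i}": 7 for i in range(2)},
}

EPOCH = date(2024, 1, 1)  # arbitrary reference date for computing cycles


def due_on(day: date, schedule=REFRESH_DAYS) -> list:
    """Subcategories whose refresh interval divides the days elapsed since EPOCH."""
    offset = (day - EPOCH).days
    return [name for name, interval in schedule.items() if offset % interval == 0]


def average_crawls_per_day(schedule=REFRESH_DAYS) -> float:
    """Long-run average number of subcategory crawls per day."""
    return sum(1 / interval for interval in schedule.values())
```

With this split, the schedule averages about 13.8 subcategory crawls per day, but all twenty fall due together whenever the day offset is a multiple of 2, 3, and 7 (including the reference date itself). A production scheduler would typically stagger start dates to smooth out those peaks.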

Understanding the Requirements

When we work with our enterprise customers on large data extraction projects, they usually have specific requirements, typically to meet internal performance standards or to improve the efficiency of an existing process.

Here are a few common special requests:

- Save a copy of the retrieved HTML (unparsed data) to a cloud storage service such as Dropbox or Amazon S3.

- Integrate with a tool that tracks data extraction. Integrations can range from a simple Slack notification when a data delivery is complete to a complex pipeline into information systems.

- Take screenshots of the product page.

If you have such needs now or expect them in the long term, plan for them ahead of time. A common example is backing up the raw HTML so you can evaluate it later.
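If you plan to keep the unparsed HTML, decide on a storage layout early. This sketch compresses each page with gzip and uploads it through an S3-style `put_object` call. The key layout (`raw-html/<site>/<yyyy/mm/dd>/<sha256 of URL>.html.gz`) is an assumption, and `client` is meant to be a boto3 S3 client, though any object with a compatible `put_object` method will do.

```python
import gzip
import hashlib
from datetime import datetime, timezone
from typing import Optional


def archive_key(site: str, url: str, fetched_at: datetime) -> str:
    """Deterministic object key: raw-html/site/yyyy/mm/dd/sha256(url).html.gz (assumed layout)."""
    digest = hashlib.sha256(url.encode("utf-8")).hexdigest()
    return f"raw-html/{site}/{fetched_at:%Y/%m/%d}/{digest}.html.gz"


def archive_html(client, bucket: str, site: str, url: str, html: str,
                 fetched_at: Optional[datetime] = None) -> str:
    """Compress the unparsed HTML and upload it; returns the object key.

    `client` is expected to behave like boto3.client("s3"); passing a fake
    object with the same put_object method keeps this testable offline.
    """
    fetched_at = fetched_at or datetime.now(timezone.utc)
    key = archive_key(site, url, fetched_at)
    client.put_object(
        Bucket=bucket,
        Key=key,
        Body=gzip.compress(html.encode("utf-8")),
        ContentType="text/html",
        ContentEncoding="gzip",
    )
    return key
```

In production you might call it as `archive_html(boto3.client("s3"), "my-raw-html-bucket", "amazon", url, html)`, where the bucket name is hypothetical. Keeping the raw copy means a parser bug can be fixed and old pages re-parsed without crawling again.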

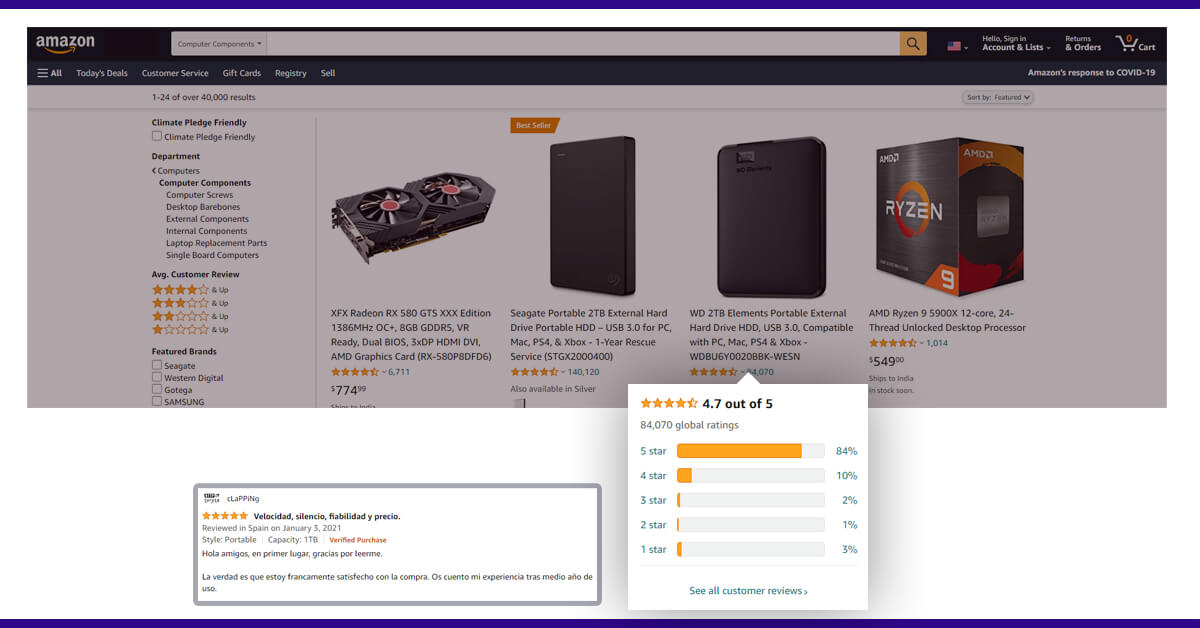

Reviews

In some cases, you will also need to retrieve reviews. Review analysis is a common way to enhance brand value and reputation. Review harvesting is a special case, and most teams overlook it during project planning, which leads to budget overruns.

What Makes Reviews Special

A popular product can have a large number of reviews spread across many pages. To retrieve all of them, you need to send a separate request for each page of reviews.
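Pagination is what makes the cost climb. Assuming a site shows 10 reviews per page, a product with 2,345 reviews needs 235 extra requests on its own. The sketch below computes the page count and builds one URL per page; the page size and the `pageNumber` query parameter are hypothetical placeholders, since every site paginates reviews differently.

```python
import math
from typing import List
from urllib.parse import urlencode

REVIEWS_PER_PAGE = 10  # assumption: check the real page size for each site


def review_page_count(total_reviews: int, per_page: int = REVIEWS_PER_PAGE) -> int:
    """Number of paginated requests needed to fetch every review."""
    return math.ceil(total_reviews / per_page) if total_reviews > 0 else 0


def review_page_urls(base_url: str, total_reviews: int) -> List[str]:
    """Build one URL per review page.

    The `pageNumber` parameter is a hypothetical placeholder, not a real site's API.
    """
    return [f"{base_url}?{urlencode({'pageNumber': page})}"
            for page in range(1, review_page_count(total_reviews) + 1)]
```

Summing `review_page_count` over every product in scope during planning gives an early estimate of how many extra requests reviews will add.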

The Data Crawling Process

A web scraper is built around the structure of a specific website. In simple terms, you send a request to the website, the website returns an HTML page, and you extract the information you need from that HTML.
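A minimal version of that request, response, and parse cycle using only the Python standard library is sketched below. It extracts just the page `<title>` to keep things short; the User-Agent string is a placeholder, and a real scraper would extract many more fields and handle errors and blocking.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class TitleParser(HTMLParser):
    """Collects the text inside the page's <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def fetch(url: str, timeout: float = 30.0) -> str:
    """Send the request and return the HTML page as text."""
    req = Request(url, headers={"User-Agent": "Mozilla/5.0 (compatible; example-bot)"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def parse_title(html: str) -> str:
    """Extract the information you need (here, just the title) from the HTML."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    return parser.title.strip()
```

For example, `parse_title(fetch("https://example.com"))` fetches a page and returns its title. Keeping fetching and parsing in separate functions pays off later, when the two stages are split across queues.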

Everything changes when you're dealing with massive volumes, such as 5 million products per day.

Web Data Extraction Process

1. Creating and Maintaining Scrapers

Scrapers can be written in Python to extract product data from e-commerce websites. In our scenario, we need data from 20 subcategories of each website. Because page structures vary, your scraper will need several parsers to capture the information.
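One common way to organize several parsers inside one scraper is a registry that maps each page layout to its parser function. In this sketch the subcategory names are hypothetical and the parser bodies are placeholders; the point is the dispatch structure, which lets you add a parser for a new layout without touching the others.

```python
from typing import Callable, Dict

Parser = Callable[[str], dict]
PARSERS: Dict[str, Parser] = {}


def register(*page_types: str):
    """Decorator that maps one or more page layouts to a parser function."""
    def decorator(func: Parser) -> Parser:
        for page_type in page_types:
            PARSERS[page_type] = func
        return func
    return decorator


@register("laptops", "tablets")
def parse_grid_layout(html: str) -> dict:
    # Placeholder: real code would extract fields from the grid-style layout.
    return {"layout": "grid", "length": len(html)}


@register("headphones")
def parse_list_layout(html: str) -> dict:
    # Placeholder for a list-style layout.
    return {"layout": "list", "length": len(html)}


def parse_page(subcategory: str, html: str) -> dict:
    """Dispatch to the parser registered for this subcategory."""
    try:
        parser = PARSERS[subcategory]
    except KeyError:
        raise ValueError(f"no parser registered for subcategory {subcategory!r}") from None
    return parser(html)
```

Failing loudly on an unregistered subcategory makes it obvious when the business team adds a category that nobody has written a parser for yet.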

The structure of categories and subcategories on Amazon and other large e-commerce websites changes often, so whoever maintains the scrapers must update the scraper code frequently.

When the business team adds new categories and websites, one or two people on your team should build the corresponding scrapers and parsers. On average, scrapers need adjustments every few weeks, because even a minor structural change can affect the fields you scrape. Depending on the scraper's logic, such a change either yields partial data or crashes the scraper. Eventually, you'll need a scraper management system.

Web scrapers work by analyzing the structure of a website, and each website presents its data in its own way. To deal with this chaos, you need a common vocabulary and a consistent output format. The format will evolve over time, but try to get it right the first time.
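A small normalization layer is one way to map each site's output onto a shared format. The sketch below renames site-specific keys to common field names and converts price strings to `Decimal`. The site names and field mappings are hypothetical, and the price parser assumes US-style formatting (comma as thousands separator, dot as decimal point).

```python
import re
from decimal import Decimal, InvalidOperation
from typing import Optional

# Hypothetical per-site field names mapped onto one shared vocabulary.
FIELD_MAP = {
    "site_a": {"title": "product_name", "price_str": "price", "img": "image_url"},
    "site_b": {"name": "product_name", "cost": "price", "image": "image_url"},
}


def parse_price(text: str) -> Optional[Decimal]:
    """Turn strings like '$1,299.99' into Decimal('1299.99'); None if unparseable."""
    cleaned = re.sub(r"[^\d.]", "", text or "")  # keep digits and the decimal point
    if not cleaned:
        return None
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        return None


def normalize(site: str, raw: dict) -> dict:
    """Rename site-specific keys, drop unmapped ones, and coerce values."""
    mapping = FIELD_MAP[site]
    record = {mapping[key]: value for key, value in raw.items() if key in mapping}
    if "price" in record:
        record["price"] = parse_price(str(record["price"]))
    record["source_site"] = site
    return record
```

Keeping the mappings as data rather than code means adding a site mostly means adding an entry to `FIELD_MAP`.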

The key to on-time data delivery is detecting changes early. You'll need a tool that detects pattern changes and notifies the scraper team, and it should run every 15 minutes.
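A simple change detector can fetch a few sample pages per site on a schedule (for example, a cron job every 15 minutes) and check that the elements your parsers depend on are still present. The sketch below does the checking part with the standard library's `HTMLParser`; it assumes your parsers rely on elements with stable `id` attributes, and the ids used with it are hypothetical.

```python
from html.parser import HTMLParser
from typing import Dict, Iterable, List


class IdCollector(HTMLParser):
    """Records every id attribute that appears in a page."""

    def __init__(self):
        super().__init__()
        self.ids = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "id" and value:
                self.ids.add(value)


def missing_markers(html: str, required_ids: Iterable[str]) -> List[str]:
    """Required ids that no longer appear in the page, sorted for stable output."""
    collector = IdCollector()
    collector.feed(html)
    collector.close()
    return sorted(set(required_ids) - collector.ids)


def check_site(sample_pages: Dict[str, str], required_ids: Iterable[str]) -> Dict[str, List[str]]:
    """Return {url: missing ids} for sample pages whose structure no longer matches."""
    required = list(required_ids)
    report = {}
    for url, html in sample_pages.items():
        missing = missing_markers(html, required)
        if missing:
            report[url] = missing
    return report
```

A non-empty report from `check_site` would be the signal to notify the scraper team, for example through the same Slack integration used for delivery notices.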

2. Scraper and Big Data Management Systems

Managing a large number of scrapers from a terminal is not practical; you need a more structured way to handle them. At iWeb Scraping, we built a graphical user interface (GUI) for configuring and managing scrapers without having to use the console every time.

Managing huge amounts of data is challenging, and you'll need to either develop your own data warehousing architecture or use a cloud-based service like Snowflake.

3. Automated Scraper Generator

Once you have built a large number of scrapers, the next challenge is to improve your scraping framework. Scrapers often share common structural patterns, which you can reuse to develop new scrapers more quickly. At that point, consider building an automated scraper generation framework.
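As a rough illustration of the idea, the factory below builds a parser from a declarative spec that maps field names to element `id` attributes, so a new site with a similar structure needs only a new spec rather than new code. It assumes each field is exposed as the text of an element with a stable id; real auto-scraper frameworks typically support richer selectors such as CSS or XPath.

```python
from html.parser import HTMLParser
from typing import Callable, Dict

# Void elements never get a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


def make_parser(spec: Dict[str, str]) -> Callable[[str], Dict[str, str]]:
    """Build a parser from a {field_name: element_id} spec."""
    id_to_field = {element_id: name for name, element_id in spec.items()}

    class _Extractor(HTMLParser):
        def __init__(self):
            super().__init__()
            self.current = None  # field currently being captured
            self.depth = 0       # nesting depth inside the captured element
            self.values: Dict[str, str] = {}

        def handle_starttag(self, tag, attrs):
            if tag in VOID_TAGS:
                return
            if self.current is not None:
                self.depth += 1
                return
            name = id_to_field.get(dict(attrs).get("id"))
            if name is not None:
                self.current, self.depth = name, 1
                self.values.setdefault(name, "")

        def handle_endtag(self, tag):
            if tag in VOID_TAGS or self.current is None:
                return
            self.depth -= 1
            if self.depth == 0:
                self.current = None

        def handle_data(self, data):
            if self.current is not None:
                self.values[self.current] += data

    def parse(html: str) -> Dict[str, str]:
        extractor = _Extractor()
        extractor.feed(html)
        extractor.close()
        # Collapse runs of whitespace; fields not found are simply absent.
        return {name: " ".join(text.split()) for name, text in extractor.values.items()}

    return parse
```

For instance, `make_parser({"name": "productTitle", "price": "priceblock"})` returns a function that extracts both fields from any page using those (hypothetical) ids.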

4. Anti-Scraping and Variation in Anti-Scraping

As noted in the assumptions, some websites use anti-scraping technologies to prevent or hinder data extraction. They either build their own IP-based blocking solution or use a third-party service. Getting around anti-scraping at scale is not easy: you'll need to buy a large number of IPs and rotate them effectively.

You'll need two things for a project that requires 3-6 million records each day:

- Someone to manage the proxy ports and the IP rotator; if they're not managed properly, your IP bill will climb.

- You'll need to work with three to four IP vendors.

E-commerce websites occasionally block entire ranges of IP addresses, which disrupts your data delivery. To avoid this, use IPs from several providers. To keep enough IPs in our pool, iWeb Scraping has worked with more than 20 providers. Decide how many IP partners you need based on your scale.
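The sketch below shows one way to rotate IPs from several vendors: proxies are interleaved so consecutive requests go through different providers, and a proxy that gets blocked is benched for a cooldown period. Vendor names, proxy addresses, and the 10-minute default cooldown are placeholders.

```python
import itertools
import time
from typing import Dict, List, Optional


class ProxyPool:
    """Round-robin over proxies from several vendors, benching blocked ones for a while."""

    def __init__(self, proxies_by_vendor: Dict[str, List[str]], cooldown_s: float = 600.0):
        # Interleave vendors so consecutive requests come from different providers.
        self._proxies = [proxy
                         for group in itertools.zip_longest(*proxies_by_vendor.values())
                         for proxy in group if proxy is not None]
        self._cycle = itertools.cycle(self._proxies)
        self._benched_until: Dict[str, float] = {}
        self.cooldown_s = cooldown_s

    def get(self, now: Optional[float] = None) -> Optional[str]:
        """Next usable proxy, or None if every proxy is currently benched."""
        now = time.monotonic() if now is None else now
        for _ in range(len(self._proxies)):
            proxy = next(self._cycle)
            if self._benched_until.get(proxy, float("-inf")) <= now:
                return proxy
        return None

    def report_blocked(self, proxy: str, now: Optional[float] = None) -> None:
        """Bench a proxy after a block (e.g. an HTTP 403 or a CAPTCHA page)."""
        now = time.monotonic() if now is None else now
        self._benched_until[proxy] = now + self.cooldown_s
```

Tracking how often each vendor's proxies get benched is also a cheap way to see which providers are worth paying for.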

Rotating IP addresses is not sufficient on its own. Bots can be blocked in many ways, and e-commerce websites constantly update their defenses. You'll need someone with a research mindset to find workarounds and keep the scrapers running.

Queue Management

When scraping at a modest scale, you can afford to make requests in a loop: at 10 requests per minute, you can still collect all the information you need in a few hours. At the scale of millions of products per day, you don't have this luxury.

Split the crawling and parsing parts of your scrapers and run them as separate tasks, so that if one part fails, it can be re-run on its own. To do this properly, you'll need a queue management system such as Redis or Amazon SQS. A common use is re-queuing failed requests so they can be retried.
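Here is a minimal, single-process sketch of the crawl/parse split with retries. In-memory `deque`s stand in for Redis lists or SQS queues, `fetch` and `parse` are functions you supply, and the limit of three attempts per URL is an assumption. In a real deployment, each stage would run as its own worker process reading from a shared queue.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

MAX_ATTEMPTS = 3  # assumption: give up on a URL after three failed fetches


def run_pipeline(urls: List[str],
                 fetch: Callable[[str], str],
                 parse: Callable[[str], dict]) -> Tuple[List[dict], List[str]]:
    """Crawl and parse as separate stages connected by queues.

    Returns (parsed records, URLs that failed every attempt).
    """
    crawl_queue: Deque[Tuple[str, int]] = deque((url, 1) for url in urls)
    parse_queue: Deque[Tuple[str, str]] = deque()
    failed: List[str] = []

    # Stage 1: crawling. Failed requests go back onto the queue for a retry.
    while crawl_queue:
        url, attempt = crawl_queue.popleft()
        try:
            parse_queue.append((url, fetch(url)))
        except OSError:  # urllib's URLError, HTTPError and timeouts all subclass OSError
            if attempt < MAX_ATTEMPTS:
                crawl_queue.append((url, attempt + 1))
            else:
                failed.append(url)

    # Stage 2: parsing, run only over pages that were fetched successfully.
    records = [{**parse(html), "url": url} for url, html in parse_queue]
    return records, failed
```

Because fetched HTML is queued before parsing, a bug in `parse` can be fixed and the parsing stage re-run without crawling the site again.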

To speed up data extraction, process the crawled URLs in parallel. In Python, you can use the standard library's multiprocessing module, which offers an interface similar to the threading module but runs work in separate processes.
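Fetching is network-bound, so threads are usually enough to overlap the waiting. The sketch below uses `multiprocessing.pool.ThreadPool`, which offers the same `map` interface as `multiprocessing.Pool` but runs workers as threads; the `fetch` argument is any function that downloads one URL, such as a wrapper around `urllib.request.urlopen`.

```python
from multiprocessing.pool import ThreadPool
from typing import Callable, Dict, List


def fetch_all(urls: List[str], fetch: Callable[[str], str], workers: int = 16) -> Dict[str, str]:
    """Fetch URLs concurrently; threads suit network-bound work that mostly waits on I/O."""
    with ThreadPool(processes=workers) as pool:
        pages = pool.map(fetch, urls)  # map returns results in input order
    return dict(zip(urls, pages))
```

For CPU-heavy parsing, `multiprocessing.Pool` (separate processes) is the usual swap, with the caveat that the worker function must be picklable and the pool should be created under an `if __name__ == "__main__":` guard.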

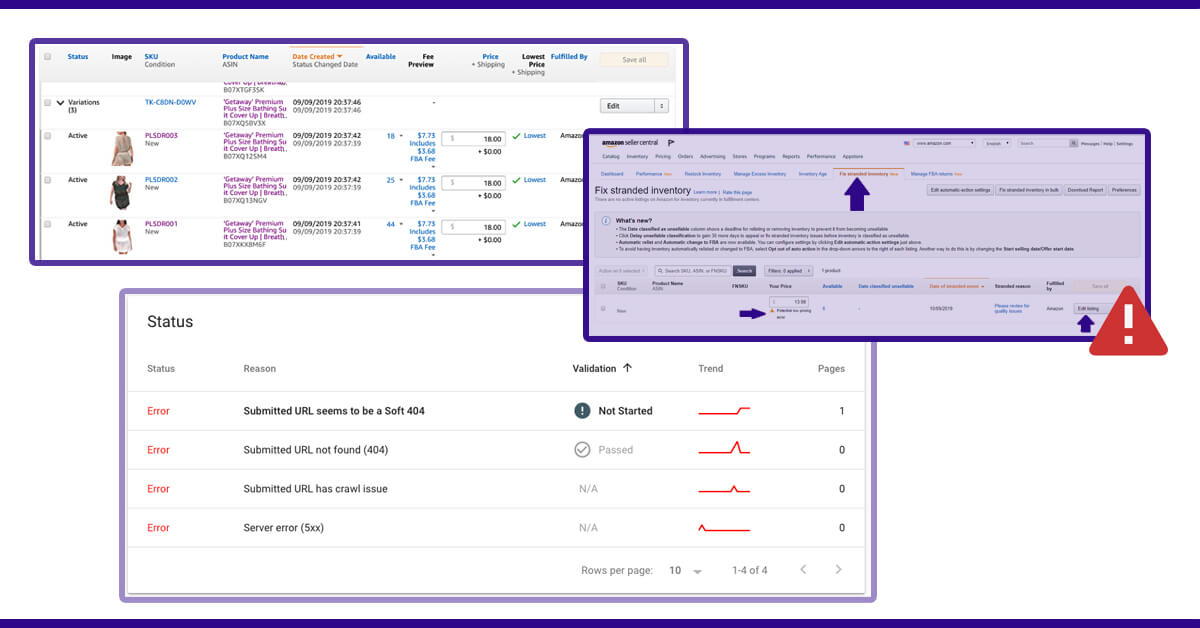

Data Quality Challenges

Data quality matters most to the business team that consumes the data, and inaccurate data makes their job harder. The data extraction team often overlooks data quality until a major issue arises. If you're using this data in a live product or for a client, set up strict data quality rules at the very start of the project.

Records that fail quality requirements compromise the integrity of the whole dataset. Enforcing quality guidelines during crawling is hard because the checks have to run in real time. Using faulty data to make business decisions can be disastrous.

Here are some of the most typical problems found in product data scraped from e-commerce websites:

1. Duplication

Depending on your scraping logic and on how Amazon serves its pages, duplicates are likely to appear when you collect and aggregate data. They are a recurring headache for data analysts, so you need to detect and remove them.
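For Amazon, a natural deduplication key is the ASIN, the 10-character product identifier that usually appears after `/dp/` or `/gp/product/` in product URLs. The sketch below keeps the most recently scraped record per ASIN and falls back to the raw URL when no ASIN is found; the record field names `product_url` and `scraped_at` are assumptions.

```python
import re
from typing import Dict, Iterable, List, Optional

# Amazon product URLs usually carry a 10-character ASIN after /dp/ or /gp/product/.
ASIN_RE = re.compile(r"/(?:dp|gp/product)/([A-Z0-9]{10})(?:[/?]|$)")


def asin_from_url(url: str) -> Optional[str]:
    match = ASIN_RE.search(url)
    return match.group(1) if match else None


def deduplicate(records: Iterable[dict]) -> List[dict]:
    """Keep one record per product, preferring the most recently scraped copy."""
    latest: Dict[str, dict] = {}
    for record in records:
        key = asin_from_url(record["product_url"]) or record["product_url"]
        if key not in latest or record["scraped_at"] > latest[key]["scraped_at"]:
            latest[key] = record
    return list(latest.values())
```

Other sites need their own stable product identifier; the same keep-the-latest logic applies once you have one.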

2. Data Validation Errors

Suppose a field should be a number, but it turns out to be text when you scrape it. This type of issue is called a data validation error. To detect and flag such issues, you'll need rule-based test frameworks. At iWeb Scraping, we define the data type and other properties of every data item. If there are any irregularities, our data validation tools alert the project's QA team, and every flagged item is carefully examined and reprocessed.
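A rule-based check can be as simple as a table of expected types and constraints per field. The rules below are illustrative assumptions, not a complete rulebook; each problem is returned as a message that a QA dashboard or alert could display.

```python
from decimal import Decimal
from typing import Any, Callable, Dict, List, Tuple

# field -> (accepted types, extra check, human-readable description); illustrative only.
Rule = Tuple[tuple, Callable[[Any], bool], str]
RULES: Dict[str, Rule] = {
    "product_name": ((str,), lambda v: len(v.strip()) > 0, "non-empty text"),
    "price": ((Decimal, int, float), lambda v: v > 0, "positive number"),
    "rating": ((int, float), lambda v: 0 <= v <= 5, "number between 0 and 5"),
    "product_url": ((str,), lambda v: v.startswith(("http://", "https://")), "absolute URL"),
}


def validate(record: dict, rules: Dict[str, Rule] = RULES) -> List[str]:
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for name, (types, check, description) in rules.items():
        value = record.get(name)
        if value is None:
            continue  # missing fields are a coverage issue, checked separately
        # bool is a subclass of int, so reject it explicitly for numeric fields.
        if isinstance(value, bool) or not isinstance(value, types):
            problems.append(f"{name}: expected {description}, got {type(value).__name__} {value!r}")
        elif not check(value):
            problems.append(f"{name}: expected {description}, got {value!r}")
    return problems
```

Type errors are reported separately from range errors because they usually point to different root causes: a parser picking up the wrong element versus a site showing unusual data.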

3. Coverage Errors

When you collect millions of products, there's a good chance you'll miss many items, whether because of request failures or poorly designed scraper logic. We call this item coverage discrepancy. The data you harvest may also lack some of the required fields, which we call field coverage discrepancy. Your test framework should detect both types of error.
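Both discrepancies reduce to simple ratios. In the sketch below, item coverage compares the product ids you expected (for example, from category listing pages) with those you actually scraped, and field coverage measures how often each required field is filled. The alert thresholds of 98% and 95% are arbitrary placeholders.

```python
from typing import Dict, Iterable, List


def item_coverage(expected_ids: Iterable[str], scraped_ids: Iterable[str]) -> float:
    """Share of expected products that were actually scraped."""
    expected = set(expected_ids)
    if not expected:
        return 1.0
    return len(expected & set(scraped_ids)) / len(expected)


def field_coverage(records: List[dict], required_fields: Iterable[str]) -> Dict[str, float]:
    """Per-field share of records where the field is present and non-empty."""
    total = len(records)
    return {
        name: (sum(1 for r in records if r.get(name) not in (None, "", [])) / total) if total else 1.0
        for name in required_fields
    }


def coverage_alerts(item_cov: float, field_cov: Dict[str, float],
                    min_item: float = 0.98, min_field: float = 0.95) -> List[str]:
    """Human-readable alerts for any coverage below its threshold."""
    alerts = []
    if item_cov < min_item:
        alerts.append(f"item coverage {item_cov:.1%} below {min_item:.1%}")
    for name, cov in field_cov.items():
        if cov < min_field:
            alerts.append(f"field {name!r} coverage {cov:.1%} below {min_field:.1%}")
    return alerts
```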

For self-service tools and data as a service powered by self-service tools, coverage inconsistency is a big issue.

4. Product Errors

In some situations, you may need to scrape multiple variants of the same product, and the data may be inconsistent across variants. Confusion arises when data is unavailable or is represented in different ways.

For example, measurements may appear in both metric and imperial units, and prices may appear in different currencies.

Similarly, variants of a mobile phone can differ in RAM size, color, price, and other attributes.

Your QA framework should address this problem as well.
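For the unit problem, one approach is to convert every measurement to a single unit at ingestion time. The sketch below normalizes weight strings to grams using the exact definitions 1 lb = 453.59237 g and 1 oz = 28.349523125 g. It assumes dot decimals and comma thousands separators, and the set of recognized unit spellings is illustrative.

```python
import re
from decimal import Decimal
from typing import Optional

LB = Decimal("453.59237")     # grams per international pound (exact)
OZ = Decimal("28.349523125")  # grams per avoirdupois ounce (exact)

GRAMS_PER_UNIT = {
    "g": Decimal("1"), "gram": Decimal("1"), "grams": Decimal("1"),
    "kg": Decimal("1000"),
    "oz": OZ, "ounce": OZ, "ounces": OZ,
    "lb": LB, "lbs": LB, "pound": LB, "pounds": LB,
}

# A number (digits, optional thousands commas, optional decimal part) followed by a unit word.
WEIGHT_RE = re.compile(r"(\d[\d,]*(?:\.\d+)?)\s*([A-Za-z]+)")


def weight_in_grams(text: str) -> Optional[Decimal]:
    """Normalize strings like '1.2 lb' or '500 g' to grams; None if unrecognized."""
    match = WEIGHT_RE.search(text)
    if match is None:
        return None
    amount, unit = match.groups()
    factor = GRAMS_PER_UNIT.get(unit.lower())
    if factor is None:
        return None
    grams = Decimal(amount.replace(",", "")) * factor
    return grams.quantize(Decimal("0.1"))  # round to 0.1 g
```

Returning `None` for unrecognized units, rather than guessing, lets the QA framework flag those records for review.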

5. Site Variations

Amazon and other large e-commerce websites frequently change their page structure. A change might affect the entire site or just a few categories. Scrapers need adjustments every few weeks because even a small structural change can affect the fields you scrape and produce inaccurate data.

The website's structure may also change while you're scraping it. If the scraper does not crash, the data scraped after the change may be silently corrupted.

If you're building an in-house team, you'll need a pattern change detector that identifies the change and stops the scraper. After making the necessary fixes, you can resume scraping Amazon, saving a significant amount of money and computing resources.
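One way to stop a scraper automatically is a circuit breaker that watches recent output. In the sketch below, every parsed record is checked for required fields, and once more than a set share of the last N records is incomplete, a `PatternChangeError` is raised so the run halts instead of writing corrupted data. The window size and threshold are assumptions to tune per site.

```python
from collections import deque
from typing import Iterable


class PatternChangeError(RuntimeError):
    """Raised to stop the scraper when too many recent records look broken."""


class FieldBreaker:
    """Trip when the share of incomplete records in a sliding window gets too high."""

    def __init__(self, required_fields: Iterable[str], window: int = 200, max_bad_ratio: float = 0.2):
        self.required = tuple(required_fields)
        self.recent = deque(maxlen=window)  # True for each incomplete record
        self.max_bad_ratio = max_bad_ratio

    def check(self, record: dict) -> None:
        bad = any(record.get(name) in (None, "") for name in self.required)
        self.recent.append(bad)
        # Only judge once the window is full, so a few early failures don't trip it.
        if len(self.recent) == self.recent.maxlen:
            ratio = sum(self.recent) / len(self.recent)
            if ratio > self.max_bad_ratio:
                raise PatternChangeError(
                    f"{ratio:.0%} of the last {len(self.recent)} records are missing required fields")
```

Call `breaker.check(record)` right after parsing each page, and catch `PatternChangeError` in the job runner to stop the crawl and alert the team.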

Data Management Challenges

Managing large amounts of data poses several difficulties. Even once you have the data, storing and using it presents a new set of technical and practical obstacles. The volume of data you collect will only grow over time, and organizations cannot get the most value out of massive amounts of data without a proper foundation in place.

1. Data Storage

You'll need to store the data in a database for processing; your QA tools and other applications will read from it. The database must be fault-tolerant and scalable, and you'll need a backup mechanism so the data stays accessible if the primary store fails. There have even been reports of malware being used to hold company data for ransom. To cover all of these scenarios, keep a backup of every record.
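As a small, self-contained illustration, the sketch below uses SQLite from Python's standard library as a stand-in for a production database. A composite primary key makes re-runs idempotent, and `sqlite3.Connection.backup()` copies the whole database to a second connection. In production you would more likely rely on a managed database's replication and snapshots, and keep backups in a separate location so a compromise of the primary store does not reach them. The table layout is an assumption.

```python
import sqlite3
from typing import Iterable, Tuple

SCHEMA = """
CREATE TABLE IF NOT EXISTS products (
    site        TEXT NOT NULL,
    product_id  TEXT NOT NULL,
    scraped_on  TEXT NOT NULL,   -- ISO date
    name        TEXT,
    price       TEXT,            -- stored as text to keep Decimal precision
    PRIMARY KEY (site, product_id, scraped_on)
)
"""


def save(conn: sqlite3.Connection, rows: Iterable[Tuple[str, str, str, str, str]]) -> None:
    """Insert or overwrite rows, so re-running a crawl for the same day is idempotent."""
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT OR REPLACE INTO products VALUES (?, ?, ?, ?, ?)", rows)


def backup(primary: sqlite3.Connection, target: sqlite3.Connection) -> None:
    """Copy the whole database using SQLite's online backup API."""
    primary.backup(target)
```

For a file-based backup, `target` can be `sqlite3.connect("products-backup.db")`, where the filename is hypothetical.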

2. Recognizing the Importance of a Cloud-Based Platform

If your business relies on data, you'll need a data collection platform; you can't keep running scrapers from a terminal. Here are some reasons why you should start building a platform as soon as possible.

3. Increasing Data Frequency

If you need data regularly and want to manage scheduling, you'll need a scraper platform with an integrated scheduler. If the platform has a graphical user interface, even non-technical people can start a scraper with a single click.

4. Reliability Needed

Running scrapers on your local machine is not a good idea. For a reliable source of data, you'll need a cloud-hosted platform, which you can build on existing services from Amazon Web Services or Google Cloud Platform.

5. Types of Anti-Scraping Technologies

To get around anti-scraping technologies, you'll need to integrate third-party tools, and the simplest way to do that is to connect their APIs to your cloud-based platform.

6. Data Exchange

If you connect your data storage to Amazon S3, Azure Storage, or similar services, you can manage data exchange with internal stakeholders. Most analytics and data preparation products on the market offer native connectors for Amazon S3 or Google Cloud Platform.

7. DevOps

DevOps is part of developing any application, and it used to be a time-consuming process. Not any longer. AWS, Google Cloud Platform, and similar providers offer versatile tools that help you build applications more quickly and reliably. These services simplify DevOps, data platform management, deployment of application and scraper code, and performance monitoring of applications and infrastructure. It's best to pick one cloud platform and use its services according to your needs.

8. Management of Change

However your company's teams use the scraped data, change is unavoidable. Changes may affect the data structure, the refresh frequency, or something else entirely, and managing them depends heavily on processes. In our experience, the best way to handle change is to get two things right:

- Use a single point of contact: even if your team has more than ten members, anyone who needs a change should contact only one person. That person allocates the work and ensures it gets done.

- Use a ticketing tool: internally, we found that a ticketing system is the best way to handle change management. When a change is required, create a ticket, collaborate with stakeholders on it, and close the ticket once the change is done.

9. Team Management

It's difficult to lead a process-driven team on a large-scale web scraping project. Here is a general idea of how the work should be divided.

10. Team Structure

You will need the following people to manage each part of the data crawling process.

- Data Crawling Experts: data crawling specialists write and maintain the web scrapers. A large-scale web scraping project covering 20 domains needs 2-3 of them.

- Platform Engineer: a professional platform engineer builds the data extraction platform and connects it to other services.

- Anti-Scraping Experts: solving anti-scraping issues requires someone with a research mindset, who also looks for new tools and services and tests whether they help against anti-scraping.

- QA Engineer: the QA engineer is in charge of developing the QA framework and ensuring data quality.

- Team Leader: the team leader should be knowledgeable in both technical and functional areas, and a strong communicator who understands the importance of delivering accurate data.

11. Conflict Resolution

Building a team is difficult; managing them is even more difficult. We strongly support Jeff Bezos' "disagree and commit" mindset. This mindset may be broken down into a few simple elements that can help you develop an in-house team.

People have different ideas about how to tackle a problem. Suppose one of your team members wants to use Solution A, while another prefers Solution B. Both approaches seem reasonable, each with its own advantages and disadvantages, and each team member will push for their preferred option.

For leaders, this is a constant source of frustration. In your team, the last thing you need is ego and politics.

Here are a few factors that complicate the situation:

- You can't go with both A and B, so you have to pick one.

- Whichever solution you choose, someone will be upset.

- How can you persuade the disappointed team member to commit to the decision and do their best work?

Improved Efficiency Through Separation

It's critical to keep the data team and the business team apart. If a team member (other than the executive or project manager) is active in both, the project is doomed to fail. Allow the Data team to do what they do best, and the Business team to do what they do best.

Do you require a free consultation? Please contact us right away.