Use cURL for Web Scraping: A Beginner's Guide

cURL (short for Client URL) makes it easy to collect data from a website. It supports many protocols, including HTTP, IMAP, POP3, SFTP, SCP, TFTP, TELNET, FILE, and LDAP. Because it runs without any user interaction, it is well suited to automation. Businesses can use cURL to upload or download remote files and to interact with APIs.

cURL was developed in 1997 by Daniel Stenberg. It is popular for its simplicity, its flexibility, and the way it handles requests and responses. The tool is highly versatile because its commands can be customized, which makes data transfer between applications smooth.

The rest of this guide looks at cURL in detail and explains how it can be used for web scraping tasks.

What is cURL?

cURL, short for Client URL, is a command-line tool. It lets a device exchange data with a server from a terminal. Using the command-line interface (CLI), the user specifies a server URL and the data to send to or retrieve from that URL.

Top Features of cURL:

cURL transfers data between a client and servers. It is free, open-source software that runs on most operating systems. Its most useful features are:

Smooth Authentication

cURL supports several authentication methods, such as Basic, Digest, NTLM, and Kerberos. These methods let it authenticate with web servers, FTP servers, and other kinds of servers.

Proxy

cURL supports HTTP, HTTPS, SOCKS4, and SOCKS5 proxies. A proxy can be configured for all requests or only for specific ones.

Easy Downloads

cURL downloads files quickly. If a network error interrupts a download, it can be resumed from where it left off (with the -C - option) instead of starting over.

Cookie Handling

cURL supports cookies, so it is easy to send cookies to a server and to store the cookies a server returns.

Bandwidth Control

cURL can control the bandwidth used during transfers. The transfer rate can be capped at a specific value with the --limit-rate option.

Debugging

cURL helps developers troubleshoot their applications. It can show the headers and content of both requests and responses.

Multiple Transfers

cURL can transfer multiple files in a single command, uploading or downloading several files at once.

IPv6 Support

cURL supports IPv6, so it can connect to servers that use IPv6 addresses.
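Most of the features above correspond to a command-line option. The sketch below wraps a few of them in small shell functions, assuming a POSIX shell; the function names are hypothetical helpers chosen for illustration and are not part of cURL.

```shell
# Resume an interrupted download: "-C -" lets curl work out the offset
# from the partial local file; -O keeps the remote file name.
resume_download() {
  curl -C - -O "$1"
}

# Cap the transfer rate. $1 is the limit (for example 200k), $2 is the URL.
rate_limited_download() {
  curl --limit-rate "$1" -O "$2"
}

# Print only the response headers (curl sends a HEAD request).
show_headers() {
  curl -sS -I "$1"
}

# Verbose mode prints request and response headers to stderr;
# the body is discarded.
debug_request() {
  curl -v -o /dev/null "$1"
}

# Download two files in one invocation, each saved under its remote name.
download_pair() {
  curl -O "$1" -O "$2"
}

# Basic authentication. $1 is the user, $2 the password, $3 the URL.
fetch_with_basic_auth() {
  curl -sS -u "$1:$2" "$3"
}

# Send the cookies stored in a file (-b) and save received cookies to it (-c).
fetch_with_cookie_jar() {
  curl -sS -b "$1" -c "$1" "$2"
}
```

For example, rate_limited_download 200k https://webpage.com/archive.zip would download the file at no more than roughly 200 kilobytes per second; the URL here is only a placeholder.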

How to install cURL?

cURL comes preinstalled on most Linux distributions. To check whether it is installed, follow these steps:

- Open the Linux console.

- Type 'curl' and press Enter.

- If cURL is installed, you'll see:

curl: try 'curl --help' or 'curl --manual' for more information

If cURL is not installed, you'll get a "command not found" message.
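The same check can be scripted. The following is a minimal sketch, assuming a POSIX shell; check_curl is a hypothetical helper name, and the install hint in the comment applies to Debian and Ubuntu only.

```shell
# Report whether curl is available and, if so, print its version line.
check_curl() {
  if command -v curl >/dev/null 2>&1; then
    echo "curl is installed"
    # "curl --version" prints several lines; keep only the first.
    curl --version | head -n 1
  else
    echo "curl is not installed"
    # On Debian or Ubuntu it can usually be installed with:
    #   sudo apt install curl
    return 1
  fi
}
```

Calling check_curl prints the installed version, or returns a non-zero status when cURL is missing, which makes it usable as a guard at the top of a scraping script.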

How to use cURL for Web Scraping?

cURL is simple to use, but it still helps to follow a basic step-by-step process. Work through the steps below in order:

Make sure cURL is installed.

Select the website you want to scrape and make sure your scraping complies with applicable regulations and local laws.

Write the cURL command. It will depend on the website's structure and the data that needs to be extracted. A cURL command typically includes:

- URL of the website

- The HTTP method (GET or POST)

- Headers in the request

- Data in the request

- The output destination, such as a file for the CSV or JSON data the server returns

From the command-line interface, change to the directory where you saved your cURL command or script, and run it.
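The components listed above can be sketched as a single command. This is an illustration under assumptions: submit_and_save is a hypothetical helper name, and the JSON headers are only an example of what a site's API might expect.

```shell
# Combine URL, method, headers, data, and output file in one request.
#   $1 = URL, $2 = request body, $3 = file to save the response in
submit_and_save() {
  # -X POST is shown explicitly; -d alone already makes curl send a POST.
  curl -sS \
    -X POST \
    -H "Content-Type: application/json" \
    -H "Accept: application/json" \
    -d "$2" \
    -o "$3" \
    "$1"
}
```

A call might look like submit_and_save https://webpage.com/api/data '{"q":"shoes"}' result.json, where the URL path and payload are placeholders for whatever the target site actually accepts.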

How to Use Basic cURL Commands?

A few basic commands are enough to get started:

To Save the Web Page Content

cURL saves the content of a web page to a file when you use the -o or --output flag.

You need to write it in this format: curl https://webpage.com -o output.html

The file output.html is saved in the current working directory. When you are downloading a file, use -O (or --remote-name) instead; the output is written to a local file named after the remote file.
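The two flags can be compared side by side. The sketch below assumes a POSIX shell; save_page and save_remote_file are hypothetical helper names.

```shell
# Save a page under a name you choose. $1 = URL, $2 = local file name.
save_page() {
  curl -sS -o "$2" "$1"
}

# Save a file under the name it has on the server. $1 = URL.
save_remote_file() {
  curl -sS -O "$1"
}
```

With these helpers, save_page https://webpage.com output.html writes output.html, while save_remote_file https://webpage.com/files/report.pdf writes report.pdf; the second URL is a placeholder.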

To Customize User-Agent

Some websites block requests or serve different content depending on the user agent. To work around this, use the -A or --user-agent flag to specify a custom user-agent string.
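A short sketch of the flag in use follows. The helper name fetch_as is hypothetical, and the user-agent string in the usage note is only an example value, not one the target site is known to require.

```shell
# Request a page while presenting a custom User-Agent.
#   $1 = user-agent string, $2 = URL
fetch_as() {
  curl -sS -A "$1" "$2"
}
```

For instance, fetch_as "Mozilla/5.0 (X11; Linux x86_64)" https://webpage.com sends the request with that string in place of cURL's default user agent.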

To Retrieve a Web Page

This is the most common cURL command. It sends an HTTP GET request to the target URL and prints the entire web page, including its HTML content, in the terminal window or command prompt.

You need to write curl https://webpage.com

Follow the Redirects

Some websites use HTTP redirects to send users to a different URL. To make cURL follow them, use the -L or --location flag.

You need to write curl -L https://webpage.com

How to Configure cURL in Different Scenarios?

cURL is powerful and can handle advanced scenarios as well. Here is what to do in several common situations:

cURL with Proxies

You may prefer to use cURL in conjunction with a proxy. The benefits are:

- A better ability to manage data requests from various geolocations.

- The ability to run a large number of data jobs simultaneously.

To use cURL with a proxy, use the built-in -x (or --proxy) option. An example command line that uses a proxy:

$ curl -x 203.0.113.10:8888 http://linux.com/

In the above snippet, '8888' is a placeholder for the proxy's port number and '203.0.113.10' is a placeholder for its IP address.
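The proxy option also accepts a scheme prefix and can be paired with proxy credentials. The following is a minimal sketch with hypothetical helper names; every address and credential shown in the usage note is a placeholder.

```shell
# Send a request through a proxy.
#   $1 = proxy, optionally with a scheme such as socks5://host:port
#   $2 = URL
fetch_via_proxy() {
  curl -sS -x "$1" "$2"
}

# Same, for a proxy that requires a login (-U is short for --proxy-user).
#   $1 = proxy, $2 = user:password, $3 = URL
fetch_via_auth_proxy() {
  curl -sS -x "$1" -U "$2" "$3"
}
```

For example, fetch_via_proxy socks5://203.0.113.10:1080 http://linux.com/ would route the request through a SOCKS5 proxy, assuming one is listening at that address.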

Custom Headers

To add custom headers, such as referer information, cookies, or other header fields, use the -H or --header flag:

curl -H "Cookie: key=value" -H "Referer: https://webpage.com" https://webpage.com/page

This command sends the request with Cookie and Referer headers. This is useful when a site restricts access based on those values or expects them as part of a normal browsing session.

Handling Retries and Timeouts

To set a maximum time for the request to complete, use the --max-time flag followed by the number of seconds:

curl --max-time 10 https://webpage.com

If you want cURL to retry after a transient error, use the --retry flag followed by the number of retry attempts:

curl --retry 3 https://webpage.com
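Timeouts and retries are often combined in a single command. The sketch below is one possible combination; fetch_resilient is a hypothetical helper name, and the specific numbers are illustrative rather than recommended values.

```shell
# Fetch a URL with a connection timeout, an overall time limit,
# and a few retries spaced two seconds apart.
fetch_resilient() {
  curl -sS \
    --connect-timeout 5 \
    --max-time 10 \
    --retry 3 \
    --retry-delay 2 \
    "$1"
}
```

Here --connect-timeout limits only the connection phase, while --max-time limits the whole transfer, so a slow server is abandoned even after a successful connection.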

HTTP Methods & Data

cURL supports the different HTTP methods, such as GET, POST, PUT, DELETE, and more. To specify a method other than GET, use the -X or --request flag.

curl -X POST https://webpage.com/api/data

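For POST requests, send the data with the -d or --data flag.

For GET requests, use the --data-urlencode flag together with -G (or --get), so that the encoded data is appended to the URL as a query string. Without -G, the data is sent as a POST body.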

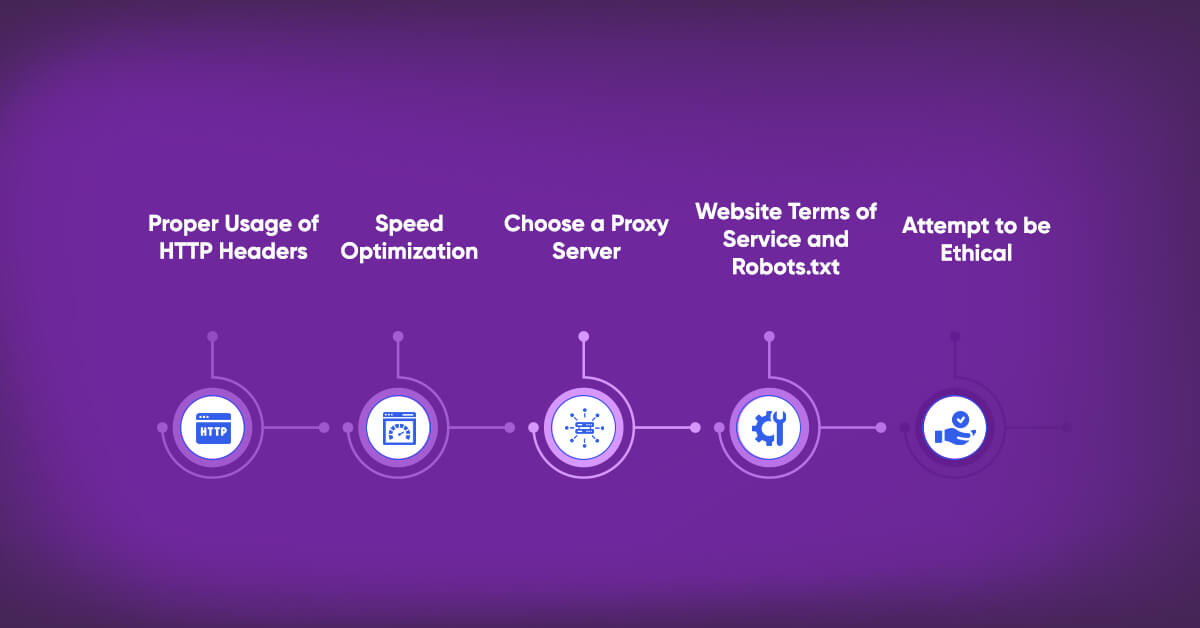
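The difference between the two data flags can be sketched as follows. The helper names are hypothetical, and the URLs and field names in the usage note are placeholders.

```shell
# POST form data in the request body. $1 = URL, $2 = data such as "name=value"
post_form() {
  curl -sS -d "$2" "$1"
}

# GET with a URL-encoded query string. -G moves the data into the URL;
# without it, --data-urlencode would send the data as a POST body.
#   $1 = URL, $2 = data such as "q=web scraping"
get_with_query() {
  curl -sS -G --data-urlencode "$2" "$1"
}
```

For example, get_with_query https://webpage.com/search "q=web scraping" lets cURL encode the space in the value before appending it to the URL, while post_form https://webpage.com/api/data "name=value" sends the pair in the body.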

Practices for Web Scraping

cURL is a strong tool for web scraping, but to avoid technical issues it's important to use it correctly.

Proper Usage of HTTP Headers

Send appropriate headers so that the server does not treat your requests as suspicious and the data is extracted accurately. Headers to consider include Accept-Encoding, Accept-Language, and User-Agent.

Speed Optimization

cURL is well known for its speed, and there are ways to optimize it further. The first is the -s flag, which silences the progress output. The second is the --compressed flag, which requests a compressed response to reduce its size and save bandwidth.
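The header and speed recommendations can be combined in one request. This is a minimal sketch: fetch_like_browser is a hypothetical helper name, and the user-agent and language values are example strings, not values any particular site requires.

```shell
# Fetch a page quietly, with browser-like headers and compression.
fetch_like_browser() {
  # -s hides the progress meter; -S still reports errors.
  # --compressed sends an Accept-Encoding header and decompresses the reply.
  curl -sS --compressed \
    -A "Mozilla/5.0 (X11; Linux x86_64)" \
    -H "Accept-Language: en-US,en;q=0.9" \
    "$1"
}
```

Using --compressed rather than a hand-written Accept-Encoding header is a deliberate choice here: when the header is set manually with -H, cURL does not decompress the response on its own.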

Choose a Proxy Server

A proxy server hides your IP address from the target website, which lowers the chance of being banned. Fewer blocked requests also mean fewer errors and faster scraping overall.

Website Terms of Service and Robots.txt

There is a good chance that a website's terms of service do not allow web scraping or restrict how its data may be extracted, and its robots.txt file indicates which paths crawlers may access. If you don't take this seriously, you might run into legal trouble.

Attempt to be Ethical

Use web scraping responsibly and ethically. That means not scraping sensitive or private data and not disrupting the normal functioning of the website.

In the End!

cURL is no doubt beneficial, but you may encounter difficulties with it. Web scraping services are available to address these drawbacks, and one such service is offered by iwebscraping.com. Its integration handles issues related to IP bans and anti-bot systems. Web scraping is simpler when cURL is combined with iwebscraping services.